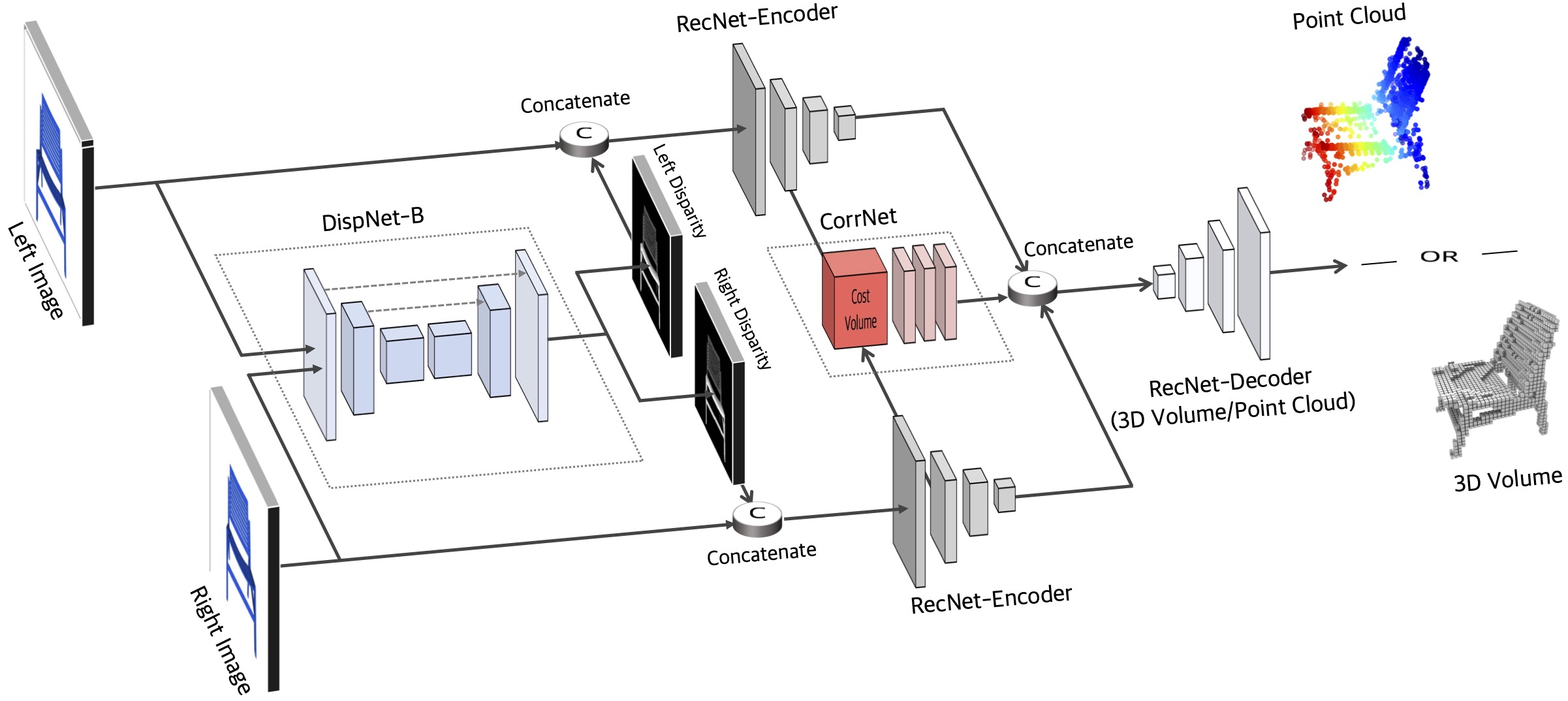

This repository contains the source code for the paper Toward 3D Object Reconstruction from Stereo Images.

Important Note: The source code is in the Stereo2Voxel and Stereo2Point branches of the repository.

    @article{xie2021towards,
      title={Toward 3D Object Reconstruction from Stereo Images},
      author={Xie, Haozhe and
              Tong, Xiaojun and
              Yao, Hongxun and
              Zhou, Shangchen and
              Zhang, Shengping and
              Sun, Wenxiu},
      journal={Neurocomputing},
      year={2021}
    }

We use the StereoShapeNet dataset in our experiments, which is available below:

The pretrained models on StereoShapeNet are available as follows:

- Stereo2Voxel for StereoShapeNet (309 MB)

- Stereo2Point for StereoShapeNet (356 MB)

git clone https://github.com/hzxie/Stereo-3D-Reconstruction.git

cd Stereo-3D-Reconstruction

pip install -r requirements.txt

Check out the branch for the model you want to use (run only one of the following):

git checkout Stereo2Voxel

git checkout Stereo2Point

cd extensions/chamfer_dist

python setup.py install --user
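The directory name `extensions/chamfer_dist` suggests that this extension computes the Chamfer distance between point sets. For reference, here is a pure-Python sketch of one common definition of that metric (the mean squared nearest-neighbor distance, summed over both directions). The function name `chamfer_distance` is hypothetical, and the compiled extension may use a different normalization or interface.

```python
def chamfer_distance(points_a, points_b):
    """Symmetric Chamfer distance between two non-empty point sets.

    Illustrative reference only (O(N*M), pure Python); not the
    repository's implementation.
    """
    def squared_distance(p, q):
        return sum((x - y) ** 2 for x, y in zip(p, q))

    def one_way(src, dst):
        # Mean over src of the squared distance to the nearest point in dst.
        return sum(min(squared_distance(p, q) for q in dst) for p in src) / len(src)

    return one_way(points_a, points_b) + one_way(points_b, points_a)


# Two single-point sets one unit apart: each direction contributes 1.0.
print(chamfer_distance([(0.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)]))  # 2.0
```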

You need to update the dataset file paths in the configuration to match your local directories:

__C.DATASETS.SHAPENET.LEFT_RENDERING_PATH = '/path/to/ShapeNetStereoRendering/%s/%s/render_%02d_l.png'

__C.DATASETS.SHAPENET.RIGHT_RENDERING_PATH = '/path/to/ShapeNetStereoRendering/%s/%s/render_%02d_r.png'

__C.DATASETS.SHAPENET.LEFT_DISP_PATH = '/path/to/ShapeNetStereoRendering/%s/%s/disp_%02d_l.exr'

__C.DATASETS.SHAPENET.RIGHT_DISP_PATH = '/path/to/ShapeNetStereoRendering/%s/%s/disp_%02d_r.exr'

__C.DATASETS.SHAPENET.VOLUME_PATH = '/path/to/ShapeNetVox32/%s/%s.mat'
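The `%s` and `%02d` placeholders in these templates are filled in with printf-style string formatting. The sketch below shows how two of the templates expand. It assumes the two `%s` fields are a category ID and a model ID and that `%02d` is a rendering index, which the path layout suggests but this README does not state. The helper `resolve_paths` and the IDs passed to it are made up for illustration.

```python
# Templates copied from the configuration lines above.
LEFT_RENDERING_PATH = '/path/to/ShapeNetStereoRendering/%s/%s/render_%02d_l.png'
VOLUME_PATH = '/path/to/ShapeNetVox32/%s/%s.mat'


def resolve_paths(category_id, model_id, view_idx):
    """Expand the templates for one sample (hypothetical helper)."""
    return {
        'left_rendering': LEFT_RENDERING_PATH % (category_id, model_id, view_idx),
        'volume': VOLUME_PATH % (category_id, model_id),
    }


paths = resolve_paths('02691156', 'example_model', 3)
print(paths['left_rendering'])
# /path/to/ShapeNetStereoRendering/02691156/example_model/render_03_l.png
print(paths['volume'])
# /path/to/ShapeNetVox32/02691156/example_model.mat
```

Note that `%02d` zero-pads the index to two digits, so the file names on disk must follow the same `render_00_l.png`, `render_01_l.png`, ... pattern.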

To train Stereo2Voxel or Stereo2Point, check out the corresponding branch and run the following command:

python3 runner.py

To test a trained or pretrained model, use the following command:

python3 runner.py --test --weights=/path/to/pretrained/model.pth
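When evaluating several checkpoints from a script, it can help to validate the weights path before launching the runner. The sketch below builds the test command using only the two flags shown above (`--test` and `--weights`). The helper name `build_test_command` and the file-existence check are illustrative assumptions, not part of the repository.

```python
import os
import sys


def build_test_command(weights_path, runner='runner.py', check_exists=True):
    """Return the argv list for testing a model (hypothetical helper).

    Only the --test and --weights flags come from the README; the
    existence check is an added convenience.
    """
    if check_exists and not os.path.isfile(weights_path):
        raise FileNotFoundError('weights file not found: %s' % weights_path)
    # sys.executable reuses the interpreter that is running this script.
    return [sys.executable, runner, '--test', '--weights=%s' % weights_path]
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root, on the branch that matches the checkpoint.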

This project is open-sourced under the MIT license.