-

Notifications

You must be signed in to change notification settings - Fork 0

Home

For more information about Ariel Space Challenge, please check out https://www.ariel-datachallenge.space/ and the Main branch description of this repo. Here we intend to give more information about overviews.

For more detailed information about what is being tried (and success/failed), please check the main branch Log_Files_Ariel.pdf.

This repo is not a single-branch repo, which means that each branch are independent of one another. This way, instead of creating a new repo for each, we preserve to the max extend certain important trials. Particularly, some branches starting with "dev" keywords are development channel that have not been merged into other "non-dev" branches either due to non-mergeable (i.e. requires resolve quite a lot of conflict we end up giving up resolving and retain it) because of some extreme changes, or because some checkpoints at earlier stages of main branch are to be preserved hence not initiated merging of branches.

Nonetheless, since the other branches are forked from the original Baseline solution provided by Mario Morvan, we do not alter the page's Markdown solution by anything. Particularly because most effort are put into working with the code than cleaning things up, hence they most of the time do not reflect what is on that branch. These changes will be discussed here instead of on each singular branch, although we do understand this might not ease your browsing of the pages. Hence the suggestion is to refer to this wiki before proceeding on with choosing a branch to explore more.

Furthermore, some branches are direct cloning of other branches, particularly cloning from the main_2 branch. This means there are lots of uncleaned files (that are not a requirement). We will state what are the main files here and discuss more in details where applicable. Of course, if in doubt, do refer to the Log_Files_Ariel.pdf on the main (master) branch. They contained the chronological changes and some ideas being implemented, which worth reading from bottom to top of the file.

The main branch contains the original idea. After reading about the science behind it, we thought that perhaps each individual wavelengths are independent of one another, because we are "just scanning the wavelength, not emitting wavelength". However, the idea that the wavelength might be dependent on each other on the emitter is not yet processed in our brain at that time, and we thought it might be easier to build 55 different single-target classification model than to build a single multi-target classification model. Although we eventually switch to the latter because we notice this isn't doing great, it is worth discussing about.

The first thing we do is to create separate csv files each contains their respective wavelength, and its target. At first, we thought the data might be huge (turns out not, because training data was about 35GB 5GB and split into 55 wavelength it's really less than 1GB) hence we create the csv files and upload them onto BigQuery to "flow from BigQuery". Of course, this have cost issue as well, so at the end we are reading from BigQuery, but reading the whole table from BigQuery, so that isn't really doing much work. We do use join operations on BigQuery especially because there are optional parameters for training that we store in another table and we join them onto one big table before downloading for training.

Why does this not do very good? If you notice, the target only varies for different AAAA's (i.e. different planets observed, coded as AAAA). With varying BB's and CC's (index of stellar spot noise instance observed and index of gaussian photon noise instance observed), the target does not change, since they represent only noise. This concludes why the model cannot learn well, as looking at different thing that gets to the same result (100 times!!!) is just going to confuse him.

This method of training was generally discarded. Although, at one point when we are to try out XGBoost (due to we didn't figure out how to have multi-target output for XGBoost), we resort to this method again. The branches are xgboost and xgboost-main-2 with different parameters and trying out different ways to implement XGBoost to the model. Ultimately, XGBoost do about as well as linear (Dense) model for single-target prediction, and the points does not improves a lot. The main entry point here is the XGBoost.ipynb files.

Note because there are some json files for access to GCP buckets and BigQuery. The projects are now deleted and not available. This includes all the data that was originally hosting in the page. Perhaps there might be Example data remnant that is left in the Github repo (can't remember whether they do) but generally we do not include it in Github due to file size.

We also thought that because the target is the same, why not merge them into one single row? Originally each row have 300 timesteps so after merging we have timesteps, and this just wasn't feasible to host in BigQuery so we hold it on csv files instead. And also, our training data reduces significantly, from 125600 to 1256. And to reduce the timesteps even more, we only take each of the 100 values in the midpoint of each 300 timesteps (i.e. [99:199] of the original [0:300]) so we have less columns. The training ends up better than any of the single-target representation, and it is the only single-target classification that surpasses the 9000 points barrier (the exact point wasn't recorded down) (the others are 7000-9000 but did not reach 9000).

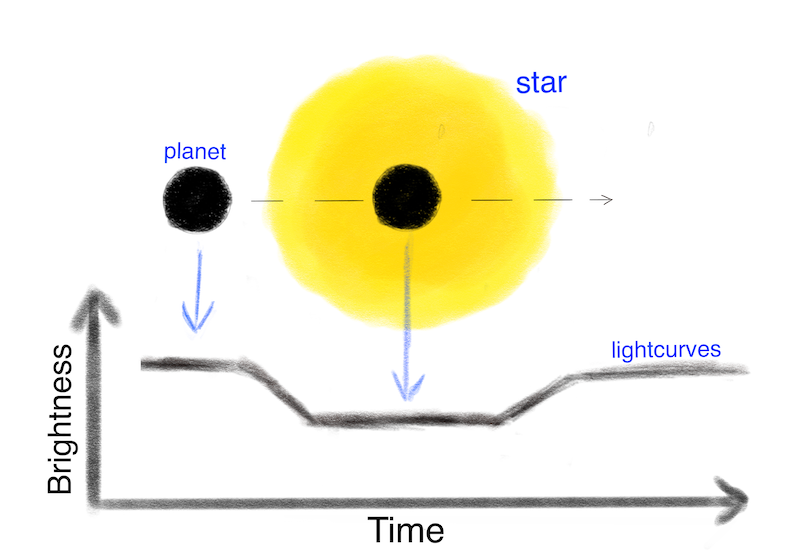

Why do we take the middle 100 points? It is thought that because of the dip in the middle and that part might be the most important. This is well done, and it later prove it is the most important part. However, this does not mean other parts are not important. In fact, only the part where we have an initial ramp (the first 30 or less time step) aren't accurately reflecting the relationship, the others provide quite interesting relationship although training with just them actually does not provide as best a transformation with the middle 100 values.

After which we try to do multi-label modelling, we found it difficult to find a function to flow files from directory in TensorFlow. At least, we aren't experienced enough to find the proper function (or there be a custom function that is not part of tf.Keras predefined functions to do this. Hence, we switch to use PyTorch as Baseline is already provided for us to make changes on top of it.

The main development branch here done by me and Rachel are main_2 and dev_11062021. Originally dev_11062021 was intended to merge into main_2, and perhaps forgotten or for unknown reason it wasn't merge, and until now afraid of information loss we let it stay that way. The main entry ponit is train_baseline.py. Other files like testing_our.ipynb and saved_fig.png are remnants of the garbage created during testing phase that have not been cleaned, and predictions are done with the walkthrough.ipynb.

The dev_2 branch are the original development branch of main_2, and retains the hyperparameter tuning using Wandb.ai parameters snapshot.

The others are dev_11_lstm where Rachel made a LSTM model changes and tried to train it but didn't returns good results. Similarly, despite LSTM trains quite well, GRU doesn't (in dev_11062021 branch). We also did some testing which eventually forms alt_lstm branch where we try modifying the LSTM such that they have multiple backbones (one LSTM and one CNN) and combine their individual outputs in the middle either via summation or torch.cat function (tried both), make some other changes such as to the optimizer tried AdamW, use amsgrad, different learning rate, and other things which you could check out more on the Log_Files_Ariel.pdf (if you manage to find it somehow since they aren't arranged properly) and they give quite good result, although because LSTM trains very long on CPU (well, 12 vCPU) we generally use a small neural units (128 units) than the original LSTM with 512 units of dual layers (both dual layers). The former trains for 1+ hours per epoch, the latter, with 20 vCPU training, takes 4.1 hours per epoch on an E2 instance on GCP (depending on CPU architecture, this time fluctuates a lot). We did not request for GPU access nor are the predictions required urgently so we take our time to train for a week (while other training occurs in parallel since we have several instances running at the same time).

Stacking and stacking_dev is getting their idea from classical machine learning (not Deep Learning) of Ensemble models. Perhaps this is not the correct way of doing stacking, but they do "stack" on top of, and besides each other. We have 6 models each focuses on 50 different timesteps training in parallel (well, some looping are done since we are not creating 6 VMs to train them and they don't take that long to train), with the worker VMs uploading their results to GCP (without intervention, of course), and master VMs downloading them after finish its loop training (by offsetting such that the worker VMs, which are weaker and takes longer to train one model, only trains 2 of the model, and the master, which are stronger, trains 4 of the models). Then, these are used to calculate the train errors, and the errors are concatenate as features to be passed onto training on the final model alongside the 300 timesteps (which could be omitted actually depending on how you engineer them, though we didn't try to omit them, sadly). This does not provide significant improvement to the original perhaps because all models are linear models (although the final model have slightly deeper architecture than the first layer models).

We also tried averaging where this is not machine learning. We assume that by averaging the output naïvely, we could get better result, if and only if some of the data points from these outputs are above the actual, and some are below. This proves out not really well as our average marks are biased (picking only the top to use as average and discard the lows), and generally they do not give better results after averaging.

The first branch that emerges from this is make_cnn_png_img which aims to create the images by merging all CC's together, stacking them on top of each other, so creating a image. The reason we use image is because we saw there are some characteristic lines that may well represent this. After training, both with and without any image augmentation techniques, which can be presented in branch cnn, tl_azure and extract_learning, we do notice they generally do not do as good as single-target modelling. Although, the result are not to say it's the worse. It is still representative to some sense, just weaker learners.

In these branches above that I discusses, they uses transfer learning from efficientnet, taken from pretrained EfficientNet for PyTorch, particularly tried b0 and b1 version. For b0, we try to use fine-tuning, just training the final layer, and still they did not work so well. Because the training time on CPU is also very long for this, we transfer the data to Azure and use Azure ML services with their GPUs. Setting up for it to work, plus handling our own errors and finding the optimized way to prepare the environment (i.e. after sending 24 jobs and failing 24 times consecutively), takes up one whole day and by then we already are 3 days away from deadline. So not much experimentation have been done on this. This is reflected in tl_azure branch.

We also tried to just use the model.extract_features() method to extract features and train on the features with purely Linear layers, also didn't do too good. Particularly, the important thing here is for the optimizer we use the model as the weights (the efficientnet weights) rather than the training model weights, because trying both results in using efficientnet weights for optimizer gets better results. The reason remains unknown as one is not an expert in understanding how optimizer works mathematically. This is reflected in the extract_learning branch.

Some best results included gets up to 8200+ points, but nothing too good. Perhaps you might want to try other way of resizing, or other methods, the model might learn something, but it is unsure whether the learned stuffs would have meaning.

That concludes our brief walkthrough of the branches. Hope you have a nice time reading through and have a nice day. Our highest point achieved is 9864, ranked 8 on the leaderboard.

P.S. If any questions, you could start an "Discussion" on the Discussion page, but we cannot assure you that we will reply. Thank you.