
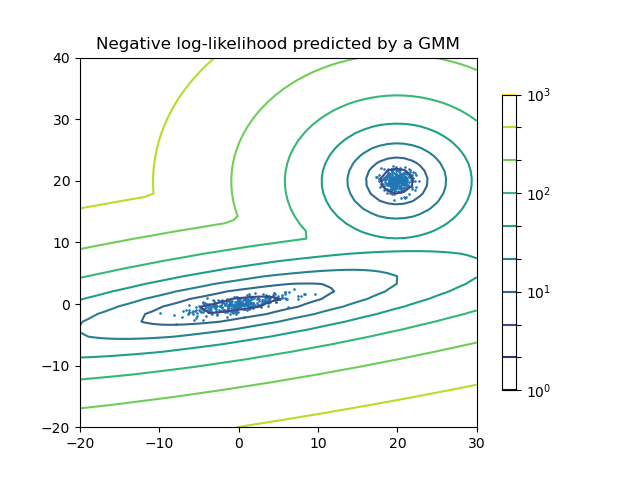

GMM is a generative probabilistic model over the contextual embeddings. The model assumes that contextual embeddings are generated from a mixture of underlying Gaussian components. These Gaussian components are assumed to be the topics.


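GMM assumes that the embeddings are generated according to the following stochastic process:

1. Select global topic weights: \(\Theta\)
2. For each component \(z\), select a mean \(\mu_z\) and a covariance matrix \(\Sigma_z\).
3. For each document \(i\):
    - Draw a topic label: \(z \sim \text{Categorical}(\Theta)\)
    - Draw a document embedding: \(\rho_i \sim \mathcal{N}(\mu_z, \Sigma_z)\)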

Priors are optionally imposed on the model parameters. Without a prior, the model is fitted with expectation maximization; with a prior on the component weights, it is fitted with variational inference.
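The generative story and the two fitting regimes can be made concrete with a small simulation. This is a sketch, not turftopic's code: it samples toy 2-D "embeddings" with NumPy and uses scikit-learn's `GaussianMixture` (expectation maximization) and `BayesianGaussianMixture` with a Dirichlet process weight prior (variational inference) as stand-ins. Whether turftopic wraps these exact estimators is an assumption here.

```python
import numpy as np
from sklearn.mixture import BayesianGaussianMixture, GaussianMixture

rng = np.random.default_rng(42)
n_topics, n_dims, n_docs = 3, 2, 600

# 1. Global topic (component) weights.
weights = rng.dirichlet(np.ones(n_topics))
# 2. Per-component means and covariances (identity covariances for simplicity).
means = rng.normal(scale=5.0, size=(n_topics, n_dims))
covs = [np.eye(n_dims) for _ in range(n_topics)]
# 3. For each document: draw a topic label, then an embedding from that component.
labels = rng.choice(n_topics, size=n_docs, p=weights)
embeddings = np.stack([rng.multivariate_normal(means[z], covs[z]) for z in labels])

# No prior on the weights: maximum likelihood via expectation maximization.
em_model = GaussianMixture(n_components=n_topics, random_state=0).fit(embeddings)

# Dirichlet process prior on the weights: variational inference.
# Overshooting the number of components is fine; unused ones get small weights.
vi_model = BayesianGaussianMixture(
    n_components=10,
    weight_concentration_prior_type="dirichlet_process",
    random_state=0,
).fit(embeddings)
```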

After the model is fitted, soft topic labels are inferred for each document. A document-topic matrix (\(T\)) is built from the likelihoods of each component given the document encodings.

In other words, for document \(i\) and topic \(z\), the matrix entry is \(T_{iz} = p(\rho_i \mid \mu_z, \Sigma_z)\), where \(\rho_i\) is the embedding of document \(i\).
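The formula for \(T_{iz}\) can be evaluated directly from fitted component parameters. The sketch below assumes a scikit-learn `GaussianMixture` with full covariances stands in for the fitted model and uses SciPy's multivariate normal density on toy 2-D embeddings.

```python
import numpy as np
from scipy.stats import multivariate_normal
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(0)
# Toy "document embeddings": two well-separated blobs in 2-D.
embeddings = np.vstack([
    rng.normal(loc=-3.0, size=(50, 2)),
    rng.normal(loc=3.0, size=(50, 2)),
])
gmm = GaussianMixture(n_components=2, covariance_type="full", random_state=0).fit(embeddings)

# T[i, z] = p(rho_i | mu_z, Sigma_z): density of document i under component z.
T = np.column_stack([
    multivariate_normal(mean=mu, cov=sigma).pdf(embeddings)
    for mu, sigma in zip(gmm.means_, gmm.covariances_)
])
print(T.shape)  # (100, 2)
```

These densities are not normalized across topics. Multiplying each column by its component weight and normalizing each row gives the posterior probabilities that scikit-learn's `predict_proba` returns.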

Term importances for the discovered Gaussian components are estimated post hoc using Soft c-TF-IDF, an extension of c-TF-IDF that can be used with continuous labels.

Let \(X\) be the document-term matrix, where each element \(X_{ij}\) is the number of times word \(j\) occurs in document \(i\). Soft class-based TF-IDF scores for terms in a topic are then calculated in the following manner:
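A minimal NumPy sketch of one plausible Soft c-TF-IDF formulation follows. It assumes that a topic's term weights are the term counts weighted by the soft labels \(T_{iz}\), normalized per topic, and multiplied by an idf-like factor \(\log(N / \sum_z |t_{zj}|)\). This is an illustration of the idea, not necessarily turftopic's exact weighting; the function name `soft_ctf_idf` is hypothetical.

```python
import numpy as np

def soft_ctf_idf(doc_topic: np.ndarray, doc_term: np.ndarray) -> np.ndarray:
    """Illustrative Soft c-TF-IDF returning an (n_topics, n_terms) matrix.

    Assumed formulation (not necessarily turftopic's exact code):
    t[z, j] = sum_i T[i, z] * X[i, j], normalized per topic,
    multiplied by idf[j] = log(N / sum_z |t[z, j]|).
    """
    eps = np.finfo(float).eps  # guards against division by zero
    t = doc_topic.T @ doc_term  # soft-label-weighted term counts per topic
    tf = t / (np.abs(t).sum(axis=1, keepdims=True) + eps)
    n_docs = doc_topic.shape[0]
    idf = np.log(n_docs / (np.abs(t).sum(axis=0) + eps))
    return tf * idf

# Four documents, two topics (soft labels), three terms; term 2 occurs everywhere.
T = np.array([[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]])
X = np.array([[2, 0, 1], [1, 0, 1], [0, 2, 1], [0, 1, 1]])
scores = soft_ctf_idf(T, X)
print(scores.round(3))
```

Because term 2 is spread evenly over all documents, its idf factor is zero in this toy example, so it gets no importance in either topic.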

GMM is also capable of dynamic topic modeling. This works by fitting one underlying mixture model over the entire corpus, since we expect a single semantic model to generate all documents. To obtain temporal representations of topics, the corpus is divided into equal-width or arbitrarily chosen time slices, and term importances are then estimated with Soft c-TF-IDF for each time slice separately.
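The slicing step can be sketched as follows, assuming equal-width time slices and a document-topic matrix obtained from a single model fitted on the whole corpus. The helper `slice_term_weights` is hypothetical and only computes the soft-label-weighted term counts per slice; in practice the Soft c-TF-IDF weighting would then be applied to each slice's counts.

```python
import numpy as np

def slice_term_weights(doc_topic, doc_term, timestamps, n_slices):
    """Split documents into equal-width time slices and return, per slice,
    the soft-label-weighted term counts (an n_topics x n_terms matrix).

    The same doc-topic matrix (one model for the whole corpus) is reused
    for every slice; only the term statistics are computed per slice.
    """
    timestamps = np.asarray(timestamps, dtype=float)
    edges = np.linspace(timestamps.min(), timestamps.max(), n_slices + 1)
    # Use only the inner edges so slice ids run from 0 to n_slices - 1.
    slice_ids = np.digitize(timestamps, edges[1:-1])
    return [
        doc_topic[slice_ids == s].T @ doc_term[slice_ids == s]
        for s in range(n_slices)
    ]

T = np.array([[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]])
X = np.array([[2, 0, 1], [1, 0, 1], [0, 2, 1], [0, 1, 1]])
timestamps = [2001, 2002, 2009, 2010]
per_slice = slice_term_weights(T, X, timestamps, n_slices=2)
```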

Gaussian mixtures can, in some sense, be considered a fuzzy clustering model.

Since we assume the existence of a ground-truth label for each document, the model technically cannot capture multiple topics in a document, only uncertainty around the topic label.

Compared with hard clustering models, this makes GMM better at accounting for documents that lie at the intersection of two or more semantically close topics.
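To illustrate the difference between uncertainty and mixed membership, the sketch below fits scikit-learn's `GaussianMixture` to two overlapping 1-D clusters standing in for two semantically close topics. Each row of `predict_proba` is a single probability distribution over labels: a point between the clusters gets an uncertain label, while a point deep inside one cluster gets a near-certain one.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(0)
# Two close "topics": overlapping 1-D clusters centred at -2 and +2.
x = np.concatenate([rng.normal(-2.0, 1.0, 500), rng.normal(2.0, 1.0, 500)]).reshape(-1, 1)
gmm = GaussianMixture(n_components=2, random_state=0).fit(x)

# A document between the two topics vs. one deep inside a topic.
proba = gmm.predict_proba(np.array([[0.0], [-4.0]]))
print(proba.round(2))
```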

Another important distinction is that clustering topic models are typically transductive, while GMM is inductive. This means that GMM infers an underlying semantic structure from which the documents are generated, instead of just describing the corpus at hand. In practical terms, GMM can infer topic labels for new, unseen documents by default, while (some) clustering models cannot.
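The practical consequence shows up directly in scikit-learn's estimators, used here as a stand-in for the two model families: a fitted `GaussianMixture` can score documents it never saw, while `DBSCAN` only labels the points it was fitted on and has no `predict` method.

```python
import numpy as np
from sklearn.cluster import DBSCAN
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(0)
train = rng.normal(size=(200, 5))   # toy embeddings used for fitting
unseen = rng.normal(size=(3, 5))    # toy embeddings of new documents

gmm = GaussianMixture(n_components=4, random_state=0).fit(train)
new_labels = gmm.predict_proba(unseen)  # soft labels for unseen documents

dbscan = DBSCAN().fit(train)
# DBSCAN exposes labels only for its training points (dbscan.labels_).
print(hasattr(dbscan, "predict"))  # False
```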

GMM can be tedious to run at scale, because the number of model parameters grows drastically with the number of mixture components and with the embedding dimensionality. To counteract this, you can use dimensionality reduction. We recommend PCA, as it is a linear and interpretable method that runs efficiently at scale.
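A quick parameter count shows why reduction helps. The sketch assumes full covariance matrices (scikit-learn's default `covariance_type="full"`); whether turftopic uses full covariances is an assumption. Each component then has \(d\) mean parameters and \(d(d+1)/2\) covariance parameters, so the count grows linearly with the number of components and quadratically with the dimensionality \(d\). `all-MiniLM-L6-v2` produces 384-dimensional embeddings.

```python
def n_gmm_parameters(n_components: int, n_dims: int) -> int:
    """Number of free parameters of a full-covariance Gaussian mixture."""
    means = n_components * n_dims
    # Each covariance matrix is symmetric: d * (d + 1) / 2 free entries.
    covariances = n_components * n_dims * (n_dims + 1) // 2
    # The mixture weights sum to one, so one of them is determined by the rest.
    weights = n_components - 1
    return means + covariances + weights

print(n_gmm_parameters(20, 384))  # 1486099 (raw all-MiniLM-L6-v2 embeddings)
print(n_gmm_parameters(20, 20))   # 4619 (after PCA to 20 dimensions)
```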

Through experimentation on the 20Newsgroups dataset, I found that with 20 mixture components and embeddings from the all-MiniLM-L6-v2 embedding model, reducing the dimensionality of the embeddings to 20 with PCA resulted in no performance decrease but ran multiple times faster. Needless to say, this difference grows with the number of topics, the embedding dimensionality, and the corpus size.

```python
from sklearn.decomposition import PCA, IncrementalPCA
from turftopic import GMM

model = GMM(20, dimensionality_reduction=PCA(20))

# For very large corpora, you can also use IncrementalPCA, which processes minibatches.
model = GMM(20, dimensionality_reduction=IncrementalPCA(20))
```

`turftopic.models.gmm.GMM`

Bases: `ContextualModel`, `DynamicTopicModel`

Multivariate Gaussian Mixture Model over document embeddings. Models topics as mixture components.

```python
from turftopic import GMM

corpus: list[str] = ["some text", "more text", ...]

model = GMM(10, weight_prior="dirichlet_process").fit(corpus)
model.print_topics()
```

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| `n_components` | `int` | Number of topics. If you're using priors on the weights, feel free to overshoot with this value. | required |
| `encoder` | `Union[Encoder, str]` | Model to encode documents/terms; `all-MiniLM-L6-v2` is the default. | `'sentence-transformers/all-MiniLM-L6-v2'` |
| `vectorizer` | `Optional[CountVectorizer]` | Vectorizer used for term extraction. Can be used to prune or filter the vocabulary. | `None` |
| `weight_prior` | `Literal['dirichlet', 'dirichlet_process', None]` | Prior to impose on component weights. If `None`, maximum likelihood is optimized with expectation maximization; otherwise variational inference is used. | `None` |
| `gamma` | `Optional[float]` | Concentration parameter of the symmetric prior. By default, `1/n_components` is used. Ignored when `weight_prior` is `None`. | `None` |
| `dimensionality_reduction` | `Optional[TransformerMixin]` | Optional dimensionality reduction step before GMM is run. This is recommended for very large datasets with high dimensionality, as the model otherwise has a vast number of parameters. We recommend using PCA, as it is a linear solution and will likely result in Gaussian components. For even larger datasets, you can use IncrementalPCA to reduce memory load. | `None` |

Attributes:

| Name | Type | Description |
|---|---|---|
| `weights_` | `ndarray of shape (n_components,)` | Weights of the different mixture components. |


`transform(raw_documents, embeddings=None)`

Infers topic importances for new documents based on a fitted model.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| `raw_documents` | | Documents to infer topic importances for. | required |
| `embeddings` | `Optional[ndarray]` | Precomputed document encodings. | `None` |

Returns:

| Type | Description |
|---|---|
| `ndarray of shape (n_documents, n_topics)` | Document-topic matrix. |


KeyNMF is a topic model that relies on contextually sensitive embeddings for keyword retrieval and term importance estimation, while taking inspiration from classical matrix-decomposition approaches for extracting topics.


The first step of the process is obtaining enhanced representations of documents using contextual embeddings. Both the documents and the vocabulary are encoded with the same sentence encoder.

Keywords are assigned to each document based on the cosine similarity of the document embedding to the embeddings of the words in the document. Only the top K words with positive cosine similarity to the document are kept.
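The keyword step can be sketched with NumPy, assuming document and vocabulary embeddings are already computed (toy 2-D vectors here instead of sentence-encoder output) and that a count matrix tells which words occur in which document. The helper `extract_keywords` is hypothetical, not turftopic's API.

```python
import numpy as np

def extract_keywords(doc_emb, vocab_emb, doc_term_counts, vocab, top_n):
    """Return one {keyword: cosine similarity} dict per document.

    Candidates are restricted to words occurring in the document; of those,
    the top_n most similar words with positive similarity are kept.
    """
    # Normalize rows so that dot products are cosine similarities.
    doc_emb = doc_emb / np.linalg.norm(doc_emb, axis=1, keepdims=True)
    vocab_emb = vocab_emb / np.linalg.norm(vocab_emb, axis=1, keepdims=True)
    sims = doc_emb @ vocab_emb.T  # (n_docs, n_vocab)
    keywords = []
    for i in range(len(doc_emb)):
        candidates = np.flatnonzero(doc_term_counts[i] > 0)
        # Rank candidate words by similarity, highest first, and keep top_n.
        ranked = candidates[np.argsort(-sims[i, candidates])][:top_n]
        keywords.append({vocab[j]: float(sims[i, j]) for j in ranked if sims[i, j] > 0})
    return keywords

vocab = ["cat", "dog", "car"]
doc_emb = np.array([[1.0, 0.0], [0.0, 1.0]])
vocab_emb = np.array([[1.0, 0.1], [0.0, 1.0], [-1.0, 0.0]])
counts = np.array([[1, 1, 1], [0, 1, 0]])  # word occurrences per document
keywords = extract_keywords(doc_emb, vocab_emb, counts, vocab, top_n=3)
```

In this toy example, "dog" (similarity 0) and "car" (negative similarity) are dropped from the first document even though `top_n=3` would leave room for them.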

These keywords are then arranged into a document-term importance matrix, where each column represents a keyword that was encountered in at least one document and each row is a document. The entries in the matrix are the cosine similarities of the given keyword to the document in semantic space.

Topics in this matrix are then discovered using Non-negative Matrix Factorization (NMF). Essentially, the model tries to discover underlying dimensions/factors along which most of the variance in term importance can be explained.
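The factorization step can be sketched with scikit-learn's `NMF` on a small, hand-made document-keyword importance matrix. This illustrates the decomposition only; the solver settings are assumptions, not turftopic's defaults. `fit_transform` yields document-topic weights and `components_` holds topic-term weights, whose largest entries give each topic's top keywords.

```python
import numpy as np
from sklearn.decomposition import NMF

vocab = np.array(["car", "cat", "dog", "engine", "pet"])
# Document-keyword importance matrix (non-negative cosine similarities).
importance = np.array([
    [0.0, 0.8, 0.0, 0.0, 0.5],
    [0.0, 0.0, 0.7, 0.0, 0.6],
    [0.9, 0.0, 0.0, 0.6, 0.0],
    [0.8, 0.0, 0.0, 0.7, 0.0],
])
nmf = NMF(n_components=2, init="nndsvd", random_state=0, max_iter=1000)
doc_topic = nmf.fit_transform(importance)  # (n_docs, n_topics)
topic_term = nmf.components_               # (n_topics, n_terms)

for z, weights in enumerate(topic_term):
    top = vocab[np.argsort(-weights)[:3]]
    print(f"Topic {z}: {', '.join(top)}")
```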

`turftopic.models.keynmf.KeyNMF`

Bases: `ContextualModel`

Extracts keywords from documents based on the semantic similarity of term encodings to document encodings. Topics are then extracted with non-negative matrix factorization from keywords' proximity to documents.

```python
from turftopic import KeyNMF

corpus: list[str] = ["some text", "more text", ...]

model = KeyNMF(10, top_n=10).fit(corpus)
model.print_topics()
```

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| `n_components` | `int` | Number of topics. | required |
| `encoder` | `Union[Encoder, str]` | Model to encode documents/terms; `all-MiniLM-L6-v2` is the default. | `'sentence-transformers/all-MiniLM-L6-v2'` |
| `vectorizer` | `Optional[CountVectorizer]` | Vectorizer used for term extraction. Can be used to prune or filter the vocabulary. | `None` |
| `top_n` | `int` | Number of keywords to extract for each document. | `25` |


`big_fit(document_stream, keyword_file='./__keywords.jsonl', batch_size=500, max_epochs=100)`

Fit KeyNMF on very large datasets that cannot fit in memory. Internally uses minibatch NMF. The stream of documents has to be a reusable iterable, as multiple passes are needed over the corpus to learn the vocabulary and then fit the model.
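The "reusable iterable" requirement matters because a Python generator is exhausted after one pass. A minimal sketch of a reusable stream, assuming the corpus is stored one document per line in a text file (the class `LineCorpus` is hypothetical): every iteration reopens the file, so the corpus can be traversed as many times as needed without loading it into memory.

```python
import tempfile
from pathlib import Path

class LineCorpus:
    """Reusable iterable over a one-document-per-line text file."""

    def __init__(self, path):
        self.path = Path(path)

    def __iter__(self):
        # Each call to iter() opens the file anew, starting a fresh pass.
        with self.path.open(encoding="utf-8") as f:
            for line in f:
                yield line.rstrip("\n")

# Write a tiny corpus to a temporary file for demonstration.
path = Path(tempfile.mkdtemp()) / "corpus.txt"
path.write_text("first document\nsecond document\n", encoding="utf-8")

corpus = LineCorpus(path)
print(len(list(corpus)), len(list(corpus)))  # 2 2

generator = (line for line in ["first document", "second document"])
print(len(list(generator)), len(list(generator)))  # 2 0
```

Assuming `big_fit` only iterates over `document_stream`, an object like `corpus` above should satisfy the multi-pass requirement, while a plain generator would yield nothing after the first pass.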


`transform(raw_documents, embeddings=None)`

Infers topic importances for new documents based on a fitted model.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| `raw_documents` | | Documents to infer topic importances for. | required |
| `embeddings` | `Optional[ndarray]` | Precomputed document encodings. | `None` |

Returns:

| Type | Description |
|---|---|
| `ndarray of shape (n_documents, n_topics)` | Document-topic matrix. |
