Welcome to the Azure Developer Portal (ADP) repository. The portal is built using Backstage.

- make and build-essential packages installed
- curl or wget installed

See the Backstage Getting Started documentation for the full list of prerequisites.

The portal is integrated with various third-party services. Connections to these services are managed through the environment variables below:

Development can be done within a devcontainer if you wish. Once the devcontainer is set up, fill out the .env file at the root and rebuild the container. Once it has rebuilt, log in to the az CLI against the tenant you wish to connect to using az login --tenant <TenantId>

If you are using VSCode, the steps are as follows:

1. Run the Dev Containers: Clone Repository in Container Volume command
2. Enter the repository URL https://github.com/DEFRA/adp-portal.git
3. Create a .env file at the root, and fill it out with the variables described below
4. Run the Dev Containers: Rebuild Container command
5. Run az login --tenant <YOUR_TENANT_ID>
6. Run yarn dev

To sign commits using GPG from within the devcontainer, please follow the steps here

The application requires the following environment variables to be set. We recommend creating a .env file in the root of your repo (this is ignored by Git) and pasting the variables into this file. This file will be used whenever you run a script through yarn, such as yarn dev.

To convert a GitHub private key into a format that can be used in the GITHUB_PRIVATE_KEY environment variable use one of the following scripts:

PowerShell

Shell

A hybrid strategy is implemented for TechDocs, which means documentation can be generated on the fly by the out-of-the-box generator or by an external pipeline. All generated documentation is stored in Azure Blob Storage.

For more information, please refer to: Ref

Run the following commands from the root of the repository:

If you want to override any settings in ./app-config.yaml, create a local configuration file named app-config.local.yaml and define your overrides there.

You need to have the Azure CLI (az) and the Azure Developer CLI (azd) installed.

Log in to both az and azd before running the server.

You must run the application in the same browser session that the authentication ran in. If you use a private window or a new session, it will not have access to the cookies required to complete authentication, and you will get a 'user not found' error message.

If you have an idea for a new feature or an improvement to an existing feature, please follow these steps:

If you're ready to submit your code changes, please follow the steps specified in the pull_request_template.

To maintain a consistent code style throughout the project, please adhere to the following guidelines:

Include information about the project's license and any relevant copyright notices.

A Helm chart library that captures general configuration for Azure Service Operator (ASO) resources. It can be used by any microservice Helm chart to import AzureServiceOperator K8s object templates configured to run on the ADP platform.

In your microservice Helm chart:

* Update Chart.yaml to apiVersion: v2.
* Add the library chart under dependencies and choose the version you want (example below). The version number can include ~ or ^ to pick up the latest PATCH and MINOR versions respectively.
* Issue the following commands to add the repo that contains the library chart, update the repo, then update dependencies in your Helm chart:

An example Demo microservice Chart.yaml:

NOTE: The ASO Helm library chart will be published to ACR, so the dependencies above will change to import the library from ACR (in progress).

First, follow the instructions for including the ASO Helm library chart.

The ASO Helm library chart has been configured using the conventions described in the Helm library chart documentation. The K8s object templates provide settings shared by all objects of that type, which can be augmented with extra settings from the parent (Demo microservice) chart. The library object templates will merge the library and parent templates. Where settings are defined in both the library and the parent chart, the parent chart settings take precedence, so library chart settings can be overridden. The library object templates expect values to be set in the parent values.yaml. Any required values (defined for each template below) that are not provided will result in an error message when processing the template (helm install, helm upgrade, helm template).

The general strategy for using one of the library templates in the parent microservice Helm chart is to create a template for the K8s object formatted as follows:

All the K8s object templates in the library require the following values to be set in the parent microservice Helm chart's values.yaml:

The values below are used internally by the ASO templates, and they are set using platform variables in the adp-flux-services repository.

For example, namespace queues are created inside the serviceBusNamespaceName namespace, and the Postgres database is created inside the postgresServerName server.

Whilst the Platform orchestration manages the 'platform' level variables, they can optionally be supplied in some circumstances. Examples include sandpit/development environments when testing against team-specific infrastructure (that isn't Platform shared). So, if you have a dedicated Service Bus or Database Server instance, you can point to those to ensure your app works as expected. Otherwise, don't supply the Platform level variables, as these will be automatically managed and orchestrated throughout all the environments against core shared infrastructure. You (as a Platform Tenant) just supply your team-specific or instance-specific infrastructure config (i.e. Queues, Storage Accounts, Databases).

- Template file: _namespace-queue.yaml
- Template name: adp-aso-helm-library.namespace-queue

An ASO NamespacesQueue object to create a Microsoft.ServiceBus/namespaces/queues resource.

A basic usage of this object template would involve the creation of templates/namespace-queue.yaml in the parent Helm chart (e.g. adp-microservice) containing:

The following values need to be set in the parent chart's values.yaml in addition to the globally required values listed above.

Note that namespaceQueues is an array of objects that can be used to create more than one queue.

Please note that the queue name is prefixed with the namespace internally. For example, if the namespace name is "adp-demo" and you have provided the queue name as "queue1", the queue created in the Service Bus is named "adp-demo-queue1".
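The prefixing rule can be sketched in a few lines of Python. This is only an illustration of the naming behaviour described above, not the library's Helm implementation; the function name `prefixed_name` is hypothetical.

```python
def prefixed_name(namespace: str, name: str) -> str:
    """Join the namespace and the user-supplied name with a hyphen.

    Hypothetical helper: it mirrors the documented naming rule only.
    """
    return f"{namespace}-{name}"


if __name__ == "__main__":
    # Uses the namespace and queue name from the example in the text.
    print(prefixed_name("adp-demo", "queue1"))
```

The same rule is described for topics and Postgres databases, so the sketch applies to those names as well.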

The following values can optionally be set in the parent chart's values.yaml to set the other properties for servicebus queues:

The owner property is used to control the ownership of the queue. The default value is yes, so you don't need to provide it if you are creating and owning the queue.

If you are only creating role assignments for a queue you do not own, explicitly set the owner flag to no so that only the role assignments are created on the existing queue.

This template also optionally allows you to create Role Assignments by providing roleAssignments properties in the namespaceQueues object.

Below are the minimum values that are required to be set in the parent chart's values.yaml to create a roleAssignments.

If you are creating only role assignments for the queue you do not own, then you should explicitly set the owner flag to no so that it will only create the role assignments on the existing queue.

The following section provides usage examples for the Namespace Queues template.

claim queue. Note that owner is set to no.

- Template file: _namespace-topic.yaml
- Template name: adp-aso-helm-library.namespace-topic

An ASO NamespacesTopic object to create a Microsoft.ServiceBus/namespaces/topics resource.

A basic usage of this object template would involve the creation of templates/namespace-topic.yaml in the parent Helm chart (e.g. adp-microservice) containing:

The following values need to be set in the parent chart's values.yaml in addition to the globally required values listed above.

Note that namespaceTopics is an array of objects that can be used to create more than one topic.

Please note that the topic name is prefixed with the namespace internally. For example, if the namespace name is "adp-demo" and you have provided the topic name as "topic1", the topic created in the Service Bus is named "adp-demo-topic1".

The following values can optionally be set in the parent chart's values.yaml to set the other properties for namespaceTopics:

The owner property is used to control the ownership of the topic. The default value is yes, so you don't need to provide it if you are creating and owning the topic.

If you are only creating role assignments for a topic you do not own, explicitly set the owner flag to no so that only the role assignments are created on the existing topic.

This template also optionally allows you to create Role Assignments by providing roleAssignments properties in the namespaceTopics object.

Below are the minimum values that are required to be set in the parent chart's values.yaml to create a roleAssignments.

If you are creating only role assignments for the Topic you do not own, then you should explicitly set the owner flag to no so that it will only create the role assignments on the existing Topic (See Example 2 in Usage examples section).

This template also optionally allows you to create Topic Subscriptions and Topic Subscriptions Rules for a given topic by providing Subscriptions and SubscriptionRules properties in the topic object.

Below are the minimum values that are required to be set in the parent chart's values.yaml to create a NamespacesTopic, NamespacesTopicsSubscription and NamespacesTopicsSubscriptionsRule

To create topicSubscriptions inside already existing topics, set the property owner to no. By default owner is set to yes which creates the topic name defined in values (See Example 4 in Usage examples section).

topicSubscriptions

The following values can optionally be set in the parent chart's values.yaml to set the other properties for topicSubscriptions:

topicSubscriptionRules

The following values can optionally be set in the parent chart's values.yaml to set the other properties for topicSubscriptionRules:

The following section provides usage examples for the Namespace Topic template.

claim-notify Topic. Note that owner is set to no.

- Template file: _flexible-servers-db.yaml
- Template name: adp-aso-helm-library.flexible-servers-db

An ASO FlexibleServersDatabase object.

A basic usage of this object template would involve the creation of templates/flexible-servers-db.yaml in the parent Helm chart (e.g. adp-microservice) containing:

The following values need to be set in the parent chart's values.yaml in addition to the globally required values listed above:

Please note that the database name is prefixed with the namespace internally. For example, if the namespace name is "adp-microservice" and you have provided the DB name as "demo-db", the database created in the Postgres server is named "adp-microservice-demo-db".

The following section provides usage examples for the Flexible-Servers-Db template.

payment database

- Template file: _userassignedidentity.yaml
- Template name: adp-aso-helm-library.userassignedidentity

An ASO UserAssignedIdentity object to create a Microsoft.ManagedIdentity/userAssignedIdentities resource.

A basic usage of this object template would involve the creation of templates/userassignedidentity.yaml in the parent Helm chart (e.g. adp-microservice) containing:

This template uses the below values, whose values are set using platform variables in the adp-flux-services repository as a part of the service's ASO helmrelease value configuration, and you don't need to set them explicitly in the values.yaml file.

The UserAssignedIdentity name is derived internally and is set to {TEAM_MI_PREFIX}-{SERVICE_NAME}.

For example, in SND1, if the TEAM_MI_PREFIX value is set to "sndadpinfmid1401" and the SERVICE_NAME value is set to "adp-demo-service", then the UserAssignedIdentity name will be "sndadpinfmid1401-adp-demo-service".

The following values can optionally be set in the parent chart's values.yaml to set the other properties for the userAssignedIdentity:

This template also optionally allows you to create Federated credentials for a given User Assigned Identity by providing federatedCreds properties in the userAssignedIdentity object.

Below are the minimum values that are required to be set in the parent chart's values.yaml to create a userAssignedIdentity and federatedCreds.

The following section provides usage examples for the UserAssignedIdentity template.

- Template file: _storage-account.yaml
- Template name: adp-aso-helm-library.storage-account.yaml

Version 2.0.0 and above

Starting from version 2.0.1, the Storage Account has been enhanced with role assignments. These data role assignments are now scoped at the storage account level, introducing two new data roles: DataWriter and DataReader.

The DataWriter role grants applications the ability to both read and write data in the blob container, tables, and files. Conversely, the DataReader role provides applications with read-only access to data in the blob container, tables, and files.

An ASO StorageAccount object to create a Microsoft.Storage/storageAccounts resource and optionally sub resources Blob Containers and Tables.

By default, private endpoints are always enabled on storage accounts and publicNetworkAccess is disabled. Optionally, you can also configure ipRules in scenarios where you want to limit access to your storage account to requests originating from specified IP addresses.

Please be aware that this template only includes A records in the central DNS zone for the Dev, Tst, Pre, and Prd environments. For Sandpit environments snd1, snd2, and snd3, it currently only generates a private endpoint without adding an A record to the DNS zone. You will need to add this entry separately via a PowerShell script.

With this template, you can create the resources below:

- Storage Accounts
- Blob containers and RoleAssignments
- Tables and RoleAssignments

A basic usage of this object template would involve the creation of templates/storage-account.yaml in the parent Helm chart (e.g. adp-microservice) containing:

Below are the default values used internally by the storage account template. They cannot be overridden by the user from the values.yaml file.

The following values need to be set in the parent chart's values.yaml in addition to the globally required values listed above.

Note that storageAccounts is an array of objects that can be used to create more than one storage account.

Please note that the storage account name must be unique across Azure. The storage account name is internally prefixed with the storageAccountPrefix.

For instance, in the Dev environment, the storageAccountPrefix is configured as devadpinfst2401. If you input "claim" as the storage account name, the final storage account name will be devadpinfst2401claim.
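As a rough sketch of this rule, the Python below concatenates the prefix and the supplied name (with no separator) and checks the result against Azure's general storage account naming rule of 3 to 24 lowercase letters and digits. The function name `storage_account_name` is hypothetical, and the length check is an addition for illustration; the text above does not say whether the library template itself performs any such validation.

```python
import re


def storage_account_name(prefix: str, name: str) -> str:
    """Build the final storage account name from the platform prefix.

    Hypothetical helper: the prefix and name are joined without a
    separator, as in the devadpinfst2401claim example.
    """
    full_name = f"{prefix}{name}"
    # Azure storage account names must be 3-24 characters long and use
    # lowercase letters and digits only. Checking it here is an
    # assumption of this sketch, not documented template behaviour.
    if not re.fullmatch(r"[a-z0-9]{3,24}", full_name):
        raise ValueError(f"invalid storage account name: {full_name!r}")
    return full_name


if __name__ == "__main__":
    print(storage_account_name("devadpinfst2401", "claim"))
```

Because the prefix already uses 15 of the 24 available characters in this example, short names such as "claim" leave the most headroom.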

The following values need to be set in the parent chart's values.yaml in addition to the globally required values listed above.

The following values can optionally be set in the parent chart's values.yaml to set the other properties for storageAccounts:

For a detailed description of each property, see here.

The owner property is used to control the ownership of the storage account. The default value is yes, so you don't need to provide it if you are creating and owning the storage account.

If you are creating Blob containers or Tables on an existing storage account that you do not own, explicitly set the owner flag to no so that only the Blob containers or Tables are created on the existing storage account.

The following section provides usage examples for the storage account template.

The table below shows the Azure Service Operator (ASO) resource naming convention in Azure and Kubernetes:

In the example below, the following platform values are used for demonstration purposes:

- namespace = 'ffc-demo'
- serviceName = 'ffc-demo-web'
- teamMIPrefix = 'sndadpinfmi1401'
- storageAccountPrefix = 'sndadpinfst1401'
- privateEndpointPrefix = 'sndadpinfpe1401'
- postgresServerName = 'sndadpdbsps1401'
- userassignedidentityName = 'sndadpinfmi1401-ffc-demo-web'

And the following user input values are used for demonstration purposes:

| Resource Type | Resource Name Format in Azure | Resource Name Example in Azure | Resource Name Format in Kubernetes | Resource Name Example in Kubernetes |
|---|---|---|---|---|
| NamespacesQueue | {namespace}-{QueueName} | ffc-demo-queue01 | {namespace}-{QueueName} | ffc-demo-queue01 |
| Queue RoleAssignment | NA | NA | {userassignedidentityName}-{QueueName}-{RoleName}-rbac-{index} | sndadpinfmi1401-ffc-demo-web-ffc-demo-queue01-queuereceiver-rbac-0 |
| NamespacesTopic | {namespace}-{TopicName} | ffc-demo-topic01 | {namespace}-{TopicName} | ffc-demo-topic01 |
| NamespacesTopicsSubscription | {namespace}-{TopicSubName} | ffc-demo-topicSub01 | {namespace}-{TopicName}-{TopicSubName}-subscription | ffc-demo-topic01-topicsub01-subscription |
| Topic RoleAssignment | NA | NA | {userassignedidentityName}-{TopicName}-{RoleName}-rbac-{index} | sndadpinfmi1401-ffc-demo-web-ffc-demo-topic01-topicreceiver-rbac-0 |
| Postgres Database | {namespace}-{DatabaseName} | ffc-demo-claim | {postgresServerName}-{namespace}-{DatabaseName} | sndadpdbsps1401-ffc-demo-claim |
| Managed Identity | {teamMIPrefix}-{serviceName} | sndadpinfmi1401-ffc-demo-web | {teamMIPrefix}-{serviceName} | sndadpinfmi1401-ffc-demo-web |
| StorageAccount | {storageAccountPrefix}{StorageAccountName} | sndadpinfst1401demo | {serviceName}-{StorageAccountName} | ffc-demo-web-sndadpinfst1401demo |
| StorageAccountsBlobService | default | default | {serviceName}-{StorageAccountName}-default | ffc-demo-web-sndadpinfst1401demo-default |
| StorageAccountsBlobServicesContainer | {ContainerName} | container-01 | {serviceName}-{StorageAccountName}-default-{ContainerName} | ffc-demo-web-sndadpinfst1401demo-default-container-01 |
| StorageAccountsTableServicesTable | {TableName} | table01 | {serviceName}-{StorageAccountName}-default-{TableName} | ffc-demo-web-sndadpinfst1401demo-default-table01 |
| PrivateEndpoint | {privateEndpointPrefix}-{ResourceName}-{SubResource} | sndadpinfpe1401-sndadpinfst1401demo-blob | {privateEndpointPrefix}-{ResourceName}-{SubResource} | sndadpinfpe1401-sndadpinfst1401demo-blob |
| PrivateEndpointsPrivateDnsZoneGroup | default | default | {PrivateEndpointName}-default | sndadpinfpe1401-sndadpinfst1401demo-blob-default |
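To make two of the Kubernetes name formats in the table concrete, here is a small Python sketch that reproduces the Queue RoleAssignment and NamespacesTopicsSubscription examples. The function names are hypothetical, the role name input "QueueReceiver" is an assumed user value, and lower-casing the result is inferred from the table's examples (for instance topicSub01 appearing as topicsub01) rather than from a stated rule.

```python
def role_assignment_k8s_name(identity_name: str, resource_name: str,
                             role_name: str, index: int) -> str:
    """Format: {userassignedidentityName}-{Name}-{RoleName}-rbac-{index}.

    resource_name is the already-prefixed queue or topic name,
    e.g. ffc-demo-queue01. Lower-casing is an assumption.
    """
    return f"{identity_name}-{resource_name}-{role_name}-rbac-{index}".lower()


def topic_subscription_k8s_name(namespace: str, topic_name: str,
                                subscription_name: str) -> str:
    """Format: {namespace}-{TopicName}-{TopicSubName}-subscription."""
    return f"{namespace}-{topic_name}-{subscription_name}-subscription".lower()


if __name__ == "__main__":
    print(role_assignment_k8s_name(
        "sndadpinfmi1401-ffc-demo-web", "ffc-demo-queue01", "QueueReceiver", 0))
    print(topic_subscription_k8s_name("ffc-demo", "topic01", "topicSub01"))
```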

In addition to the K8s object templates described above, a number of helper templates are defined in _helpers.tpl that are both used within the library chart and available to use within a consuming parent chart.

adp-aso-helm-library.default-check-required-msg

- Usage: {{- include "adp-aso-helm-library.default-check-required-msg" . }}

A template defining the default message to print when checking for a required value within the library. This is not designed to be used outside of the library.

adp-aso-helm-library.commonTags

- Usage: {{- include "adp-aso-helm-library.commonTags" $ | nindent 4 }} ($ is mapped to the root scope)

Common tags to apply to the tags of all ASO resource objects on the ADP K8s platform. This template relies on the globally required values listed above.

To stop the Azure Service Operator from deleting the resources created in Azure when the Kubernetes resource manifest files are deleted, the section below can be added to values.yaml in the parent Helm chart.

This specifies the reconcile policy to be used, which can be set to manage, skip or detach-on-delete. More information is available here.

THIS INFORMATION IS LICENSED UNDER THE CONDITIONS OF THE OPEN GOVERNMENT LICENCE found at:

http://www.nationalarchives.gov.uk/doc/open-government-licence/version/3

The following attribution statement MUST be cited in your products and applications when using this information.

Contains public sector information licensed under the Open Government license v3

The Open Government Licence (OGL) was developed by the Controller of Her Majesty's Stationery Office (HMSO) to enable information providers in the public sector to license the use and re-use of their information under a common open licence.

It is designed to encourage use and re-use of information freely and flexibly, with only a few conditions.

We have now implemented an abstraction layer within the adp-helm-library that allows memory and CPU resources to be allocated dynamically based on the memory and CPU tier provided in the values.yaml file.

The memory and CPU tier values are shown in the table below:

| TIER | CPU-REQUEST | CPU-LIMIT | MEMORY-REQUEST | MEMORY-LIMIT |
|---|---|---|---|---|
| S | 50m | 50m | 50Mi | 50Mi |
| M | 100m | 100m | 100Mi | 100Mi |
| L | 150m | 150m | 150Mi | 150Mi |
| XL | 200m | 200m | 200Mi | 200Mi |
| XXL | 300m | 600m | 300Mi | 600Mi |
| CUSTOM | <?> | <?> | <?> | <?> |

Instructions

The following values can optionally be set in a values.yaml to select the required CPU and memory for a container:

Example 1 - select an Extra Large (XL) tier:

Example 2 - select a Small (S) tier:

Example 3 - select CUSTOM and provide your own values if the tier sizes don't fit your requirements.

Example 4 - the default is Medium (M). If this works for you, you don't need to pass a memCpuTier.

NOTE: If you do not add a memCpuTier, the tier will default to M.

NOTE: You can also choose CUSTOM and provide your own values if the tier sizes don't fit your requirements. If you choose CUSTOM, requestMemory, requestCpu, limitMemory and limitCpu are required.
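The tier selection rules above can be summarised with a short Python sketch. It is not the Helm implementation in adp-helm-library: the function name `resolve_resources` and the flat dictionary shape are assumptions made for illustration, while the tier figures, the default of M, and the four CUSTOM keys come from the table and notes above.

```python
# Tier figures copied from the table above.
TIERS = {
    "S": {"requestCpu": "50m", "limitCpu": "50m", "requestMemory": "50Mi", "limitMemory": "50Mi"},
    "M": {"requestCpu": "100m", "limitCpu": "100m", "requestMemory": "100Mi", "limitMemory": "100Mi"},
    "L": {"requestCpu": "150m", "limitCpu": "150m", "requestMemory": "150Mi", "limitMemory": "150Mi"},
    "XL": {"requestCpu": "200m", "limitCpu": "200m", "requestMemory": "200Mi", "limitMemory": "200Mi"},
    "XXL": {"requestCpu": "300m", "limitCpu": "600m", "requestMemory": "300Mi", "limitMemory": "600Mi"},
}
CUSTOM_KEYS = ("requestMemory", "requestCpu", "limitMemory", "limitCpu")


def resolve_resources(values: dict) -> dict:
    """Return CPU and memory requests and limits for container values.

    Hypothetical helper: `values` is assumed to be a flat mapping that
    may contain memCpuTier and, for CUSTOM, the four resource keys.
    """
    tier = values.get("memCpuTier", "M")  # the tier defaults to M
    if tier == "CUSTOM":
        missing = [key for key in CUSTOM_KEYS if key not in values]
        if missing:
            raise ValueError(f"CUSTOM tier requires: {', '.join(missing)}")
        return {key: values[key] for key in CUSTOM_KEYS}
    if tier not in TIERS:
        raise ValueError(f"unknown memCpuTier: {tier!r}")
    return dict(TIERS[tier])


if __name__ == "__main__":
    print(resolve_resources({"memCpuTier": "XL"}))
    print(resolve_resources({}))
```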

IMPORTANT: Your team namespace will be given a fixed amount of resources via ResourceQuotas. Once your cumulative resource requests exceed the quota assigned to your namespace, all further deployments will be unsuccessful. If you require an increase to your ResourceQuota, you will need to raise a request with the ADP team. It's important that you monitor the performance of your application and adjust pod requests and limits accordingly. Please choose the appropriate CPU and memory tier for your application, or provide custom values for your CPU and memory requests and limits.

References

https://learn.microsoft.com/en-us/azure/aks/developer-best-practices-resource-management

This document outlines the QA approach for the Azure Developer Platform (ADP). The objective of quality assurance is to ensure that all business applications developed and hosted on the ADP meet DEFRA's standards of quality, reliability and performance.

The Quality Assurance approach follows the traditional QA pyramid, which models how software testing is categorised and layered.

Below are the tools that are currently supported on the ADP:

| Type of Test | Tooling |
|---|---|
| Unit Testing | C#: NUnit/xUnit, NSubstitute; NodeJS: Jest |
| Functional/Acceptance | WebDriver.IO |
| Security Testing | OWASP ZAP (Zed Attack Proxy) |
| API Testing (Contract Testing) | PACT Broker |
| Accessibility Testing | AXE Lighthouse? |
| Performance Testing | JMeter, BrowserStack. Azure Load Testing is under consideration |
| Exploratory Testing (Manual) | ADO Test Plans |

Development teams use the ADP Portal to scaffold a new service using one of the exemplar software templates (refer to How to create a platform service). Based on the template type (frontend or backend), basic tests will be included that the teams can build on as they add more functionality to the service.

The ADP Platform provides the ability to execute the above tests. These tests are executed as post-deployment tests. The pipeline will check for the existence of specific docker-compose test files to determine if it can run tests. Refer to the how-to guides for the different types of tests.

However, it is the responsibility of the delivery projects to ensure that the business services they are delivering have sufficient tests of each type to meet DEFRA's standards.

The supported programming frameworks are .NET/C# and NodeJS/JavaScript.

The unit tests are executed in the CI Build Pipeline. SonarQube analysis has been integrated into the ADP Core Pipeline Template to ensure the code conforms to the DEFRA quality standards.

Links to the SonarCloud analysis and Snyk analysis will be available on the component page of the service in the ADP Portal.

These end-to-end tests are executed against internal (via OpenVPN) or public endpoints for frontends and APIs.

Refer to the Guide on how to create an Acceptance Test

These tests should be executed against internal (via OpenVPN) or public endpoints for frontends and APIs. Docker is used with BrowserStack to execute the performance tests.

As a prerequisite, Non-Functional Requirements should be defined by the delivery project to set the baseline for the expected behaviour, e.g. expected average API response time or page load duration.

There are various types of performance tests.

Refer to the Guide on how to create a Performance Test

These tests verify that all DEFRA public websites and business services comply with the WCAG 2.2 AA accessibility standard.

Refer to the guidance on Understanding accessibility requirements for public sector bodies

SonarQube Security Testing has been incorporated into the CI Build Pipeline. In addition, OWASP ZAP is executed as part of the post-deployment tests.

ADP Services Secret Management

The secrets in ADP services are managed using Azure Key Vault. The secrets for each individual service are imported into Azure Key Vault through ADO Pipeline tasks and are accessed by the services through the application configuration YAML files. Importing secrets into the Key Vault and referencing them from individual services are both automated using ADO Pipelines. The detailed workflow for secret management includes the following steps:

1. Configure ADO Library

Create variable groups for each environment of the service in ADO Library.

Naming Convention: Variable groups for a service are named using the following convention.

Example: The variable groups for different environments of a service are shown below.

Add the variables and the values for the secrets in each of the variable groups.

Variable Naming Convention: Variables in the variable groups are named using the following convention.

Example: Secret variables for a service are shown below.

2. ADO Pipeline - Import secrets to Key Vault

The variables and values from the variable groups are imported into Azure Key Vault automatically using a pipeline task and PowerShell scripts.

Example: The code snippet involved in importing the secrets to the Azure Key Vault is shown below.

3. Azure Key Vault - Imported secrets

After the secrets are added to the ADO Library variable groups, a successful run of the service CI pipeline imports the secrets into the Key Vault.

Example: Secrets imported to the Key Vault for a service are shown below.

4. App Config

Access the secrets from the Key Vault through the appConfig YAML files included in each service. There are two different kinds of appConfig files.

Environment-specific appConfig file: Each service has its own environment-specific appConfig file to access its respective secrets from the Key Vault.

File Naming Convention:

Example: The appConfig files for different environments for a service are shown below.

The type of the variable (key) that references a secret from the Key Vault should be defined as type: "keyvault" in the config YAML file.

5. ADO Pipeline - Import App Config

The Pipeline tasks shown below use the environment-specific appConfig YAML files to import the secrets from Azure Key Vault to the service.

6. Run Pipeline - appConfig only

The secrets can be added to the Key Vault and referenced by the service using the appConfig files without running the full pipeline. To do this, run the pipeline with the Deploy App Config check box selected. This runs only the secret management tasks instead of all the tasks in the pipeline, which is useful when updating the secrets of a service.

The Azure Development Platform Portal is built using Backstage.

The Portal enables users to self-serve by providing the functionality below.

Include instructions for getting the project set up locally. This may include steps to install dependencies, configure the development environment, and run the project.

ADP enables authorised users to self-service through the platform, allowing them to create and manage required arms-length bodies, delivery programmes, and delivery teams. The data added can subsequently be edited by those authorised users and viewed by all.

The diagram below illustrates the high-level process flow of user journeys, distinguishing between four types of users: ADP Admins, Programme Managers, Project Managers, and Project Developers. ADP Admins have the authority to create new ALBs (Arms-Length Bodies) and initially seed Programme Managers. Programme Managers are able to onboard additional Programme and Project Managers, as well as to create Delivery Programmes and Projects. Project Managers have the capability to create new Delivery Projects and onboard Delivery Project Members. Finally, Project Developers are tasked with creating and managing platform services.

In the table below you can see the permissions per ADP Persona. Please note that users are not restricted to one role/persona. A single person may be a Programme Manager, a Team Manager for a team in their Programme and a developer within that team.

| Method | Parameters | Example Request Body | Example Response |
|---|---|---|---|
| GET | | N/A | [{ "creator":"user:default/johnDoe", "owner":"owner value", "title":"ALB 1", "alias":"ALB", "description": "ALB description", "url": null, "name":"alb-1", "id": "123", "created_at": "2024-02-26T15:58:40.337Z", "updated_at": "2024-02-26T15:58:40.337Z","updated_by": "user:default/johnDoe"}, { "creator":"user:default/johnDoe", "owner":"owner value", "title":"ALB 2", "alias":"ALB", "description": "ALB description", "url": null, "name":"alb-2", "id": "1234", "created_at": "2024-02-26T15:58:40.337Z", "updated_at": "2024-02-26T15:58:40.337Z","updated_by": "user:default/johnDoe"}] |
| GET | id | N/A | { "creator":"user:default/johnDoe", "owner":"owner value", "title":"ALB 1", "alias":"ALB", "description": "ALB description", "url": null, "name":"alb-1", "id": "123", "created_at": "2024-02-26T15:58:40.337Z", "updated_at": "2024-02-26T15:58:40.337Z","updated_by": "user:default/johnDoe"} |
| POST | | { "title": "ALB", "description": "ALB Description" } | { "title": "ALB", "description": "ALB Description" , "url": null, "alias": null, "name": "alb", "creator":"user:default/johnDoe", "owner":"owner value", "id": "12345","created_at": "2024-02-26T15:58:40.337Z", "updated_at": "2024-02-26T15:58:40.337Z","updated_by": "user:default/johnDoe"} |
| PATCH | id | { "id": "12345", "title": "Updated ALB Title" } | { "title": "Updated ALB Title", "description": "ALB Description" , "url": null, "alias": null, "name": "alb", "creator":"user:default/johnDoe", "owner":"owner value", "id": "12345","created_at": "2024-02-26T15:58:40.337Z", "updated_at": "2024-02-26T15:58:40.337Z","updated_by": "user:default/johnDoe"} |

| Method | Example Response |
|---|---|
| GET | {"123": "ALB 1","1234": "ALB 2","12345": "ALB 3","123456": "ALB 4"} |

+
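The id-to-title response above can be derived from the full ALB list. The following is a minimal sketch, assuming the field names shown in the example responses; the `ArmsLengthBody` interface and the `toNameMap` helper are hypothetical names used for illustration and are not taken from the portal's code.

```typescript
// Subset of the ALB fields shown in the example responses above.
// The interface name is hypothetical; the field names come from the examples.
interface ArmsLengthBody {
  id: string;
  name: string;
  title: string;
  alias: string | null;
  description: string;
  url: string | null;
}

// Builds an id -> title lookup in the same shape as the example response,
// e.g. {"123": "ALB 1", "1234": "ALB 2"}.
function toNameMap(albs: ArmsLengthBody[]): Record<string, string> {
  const names: Record<string, string> = {};
  for (const alb of albs) {
    names[alb.id] = alb.title;
  }
  return names;
}
```

A lookup of this shape is convenient for populating a dropdown, where only the identifier and display title are needed.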

| Method | Parameters | Example Request Body | Example Response |
|---|---|---|---|
| GET | | N/A | [{ "id": "123", "programme_managers":[], "arms_length_body_id": "12345", "title": "Delivery Programme 1", "name": "delivery-programme-1", "alias": "Delivery Programme", "description": "Delivery Programme description", "finance_code": "1", "delivery_programme_code": "123", "url": "exampleurl.com" , "created_at": "2024-02-26T15:58:40.337Z", "updated_at": "2024-02-26T15:58:40.337Z","updated_by": "user:default/johnDoe"}, { "id": "1234", "programme_managers":[], "arms_length_body_id": "12345", "title": "Delivery Programme 2", "name": "delivery-programme-2", "alias": "Delivery Programme", "description": "Delivery Programme description", "finance_code": "1", "delivery_programme_code": "123", "url": "exampleurl.com" , "created_at": "2024-02-26T15:58:40.337Z", "updated_at": "2024-02-26T15:58:40.337Z","updated_by": "user:default/johnDoe"}] |
| GET | id | N/A | { "id": "123", "programme_managers":[{"aad_entity_ref_id": "123", "id": "1", "delivery_programme_id" :"123", "email": "email@example.com", "name": "John Doe"}], "arms_length_body_id": "12345", "title": "Delivery Programme 1", "name": "delivery-programme-1", "alias": "Delivery Programme", "description": "Delivery Programme description", "finance_code": "1", "delivery_programme_code": "123", "url": "exampleurl.com" , "created_at": "2024-02-26T15:58:40.337Z", "updated_at": "2024-02-26T15:58:40.337Z","updated_by": "user:default/johnDoe"} |
| POST | | { "programme_managers":[{"aad_entity_ref_id": "123"}, {"aad_entity_ref_id": "1234"}], "arms_length_body_id": "12345", "title": "Delivery Programme", "alias": "Delivery Programme", "description": "Delivery Programme description", "finance_code": "1", "delivery_programme_code": "123", "url": "exampleurl.com" } | { "id": "1234", "programme_managers":[], "arms_length_body_id": "12345", "title": "Delivery Programme", "name": "delivery-programme", "alias": "Delivery Programme", "description": "Delivery Programme description", "finance_code": "1", "delivery_programme_code": "123", "url": "exampleurl.com" , "created_at": "2024-02-26T15:58:40.337Z", "updated_at": "2024-02-26T15:58:40.337Z","updated_by": "user:default/johnDoe"} |
| PATCH | | { "id": "1234", "title": "Updated Delivery Programme Title" } | { "id": "1234", "programme_managers":[], "arms_length_body_id": "12345", "title": "Updated Delivery Programme Title", "name": "delivery-programme", "alias": "Delivery Programme", "description": "Delivery Programme description", "finance_code": "1", "delivery_programme_code": "123", "url": "exampleurl.com" , "created_at": "2024-02-26T15:58:40.337Z", "updated_at": "2024-02-26T15:58:40.337Z","updated_by": "user:default/johnDoe"} |

+

| Method | Example Response |
|---|---|
| GET | [{"id": "5464de88-bc76-4a0b-a491-77284c392dab","delivery_programme_id": "0bd0cb6b-569a-4c0f-bc6d-5b8708f45c4a","aad_entity_ref_id": "aad entity ref id 1","email": "example@defra.onmicrosoft.com","name": "name 1"},{"id": "f0bca259-d0a2-4d30-8166-4569f8e7b6f2","delivery_programme_id": "0bd0cb6b-569a-4c0f-bc6d-5b8708f45c4a","aad_entity_ref_id": "aad entity ref id 2","email": "example@defra.onmicrosoft.com","name": "name 2"}] |

+

| Method | Example Response |
|---|---|
| GET | {"items": [ {"metadata": { "name": "example.onmicrosoft.com", "annotations": {"graph.microsoft.com/user-id": "aad entity ref id 1","microsoft.com/email": "example@defra.onmicrosoft.com"}},"spec": {"profile": {"displayName": "name 1"}}},{"metadata": {"name": "example.onmicrosoft.com","annotations": {"graph.microsoft.com/user-id": "aad entity ref id 2","microsoft.com/email": "example@defra.onmicrosoft.com"}},"spec": {"profile": {"displayName": "name 2"}}}]} |

+

| Method | Parameters | Example Request Body | Example Response |
|---|---|---|---|
| GET | | N/A | [{"id": "123","name": "delivery-project-1","title": "Delivery Project 1","alias": "Delivery Project","description": "Delivery Project Description","finance_code": "","delivery_programme_id": "1","delivery_project_code": "1","url": "","ado_project": "","created_at": "2024-04-03T06:41:56.257Z","updated_at": "2024-04-03T08:42:48.242Z","updated_by": "user:default/johnDoe.com"}, {"id": "1234","name": "delivery-project-2","title": "Delivery Project 2","alias": "Delivery Project","description": "Delivery Project Description", "finance_code": "", "delivery_programme_id": "2", "delivery_project_code": "2", "url": "", "ado_project": "", "created_at": "2024-04-03T05:42:31.914Z", "updated_at": "2024-04-03T08:43:03.622Z","updated_by": "user:default/johnDoe"}] |
| GET | id | N/A | {"id": "1234","name": "delivery-project-2","title": "Delivery Project 2","alias": "Delivery Project","description": "Delivery Project Description", "finance_code": "", "delivery_programme_id": "2", "delivery_project_code": "2", "url": "", "ado_project": "", "created_at": "2024-04-03T05:42:31.914Z", "updated_at": "2024-04-03T08:43:03.622Z","updated_by": "user:default/johnDoe"} |
| POST | | {"title": "Delivery Project 3","alias": "Delivery Project","description": "Delivery Project Description", "finance_code": "", "delivery_programme_id": "3", "delivery_project_code": "3", "url": "", "ado_project": ""} | {"id": "12345","name": "delivery-project-3","title": "Delivery Project 3","alias": "Delivery Project","description": "Delivery Project Description", "finance_code": "", "delivery_programme_id": "3", "delivery_project_code": "3", "url": "", "ado_project": "", "created_at": "2024-04-03T05:42:31.914Z", "updated_at": "2024-04-03T08:43:03.622Z","updated_by": "user:default/johnDoe"} |
| PATCH | | { "id": "12345", "title": "Updated Delivery Project Title" } | {"id": "12345","name": "delivery-project-3","title": "Updated Delivery Project Title","alias": "Delivery Project","description": "Delivery Project Description", "finance_code": "", "delivery_programme_id": "3", "delivery_project_code": "3", "url": "", "ado_project": "", "created_at": "2024-04-03T05:42:31.914Z", "updated_at": "2024-04-03T08:43:03.622Z","updated_by": "user:default/johnDoe"} |

+

We can extend Backstage's functionality by creating and installing plugins. Plugins can either be created by us (1st party) or we can install 3rd party plugins. The majority of 3rd party plugins are free and open source, however there are some exceptions.

This page tracks the plugins we have installed and the plugins we would like to evaluate.

| Plugin | Category | Status | Author | Description |
|---|---|---|---|---|
| azure-devops | Catalog | Implemented | Backstage | Displays Pipeline runs on component entity pages. We're not using the repos or README features. Requires components to have two annotations: dev.azure.com/project contains the ADO project name and dev.azure.com/build-definition contains the pipeline name. |
| GitHub pull requests | Catalog | Implemented | Roadie | Adds a dashboard displaying GitHub pull requests on component entity pages. Requires components to have the github.com/project-slug annotation in their catalog-info file. |
| Grafana dashboard | Catalog | Implemented | K-Phoen | Displays Grafana alerts and dashboards for a component. Note that we cannot use the Dashboard embed because Managed Grafana does not allow us to configure embedding. |
| Azure DevOps scaffolder actions | Scaffolder | Implemented | ADP | Custom scaffolder actions to get service connections, create and run pipelines, and permit access to ADO resources. Loosely based on the 3rd party package by Parfumerie Douglas. |
| GitHub scaffolder actions | Scaffolder | Implemented | ADP | Custom scaffolder actions to create GitHub teams and assign them to repositories. |
| Lighthouse | Catalog | Agreed | Spotify | Generates on-demand Lighthouse audits and tracks trends directly in Backstage. Helps to improve accessibility and performance, and to adhere to best practices. Requires a PostgreSQL database and a running instance of the lighthouse-audit-service API, which executes the tests before sending results back to the plugin. |
| SonarQube | Catalog | Agreed | SDA-SE | Adds frontend visualisation of code statistics from SonarCloud or SonarQube. Requires a SonarCloud subscription. |
| Prometheus | Catalog | Agreed | Roadie | Adds embedded Prometheus graphs and alerts into Backstage. Requires setting up a new proxy endpoint for the Prometheus API in the app-config.yaml. |
| Flux | Catalog | Agreed | Weaveworks | The Flux plugin for Backstage provides views of Flux resources available in Kubernetes clusters. |
| Kubernetes | Catalog | Agreed | Spotify | Kubernetes in Backstage is a tool that's designed around the needs of service owners, not cluster admins. Now developers can easily check the health of their services no matter how or where those services are deployed, whether it's on a local host for testing or in production on dozens of clusters around the world. |
| Snyk | Catalog | Assess | Snyk | Snyk Backstage plugin leverages the Snyk API to enable Snyk data to be visualized directly in Backstage. |
| KubeCost | Catalog | Assess | SuXess-IT | Kubecost is a plugin to help engineers get information about cost usage/prediction of their deployment. Some development work is needed around namespaces. It does not appear to be regularly maintained or updated. |

| Status | Description |
|---|---|
| Assess | Suggestions that we need to evaluate before accepting them into the backlog. |
| Agreed | Discussed and agreed to accept it, but more work is needed to flesh out details. |
| Accepted | The plugin is suitable for our portal and a story for installing it has been added to the backlog. |
| Implemented | The plugin has been implemented. |
| Rejected | The plugin is unsuitable for the portal and we won't be installing it. |

| Category | Description |
|---|---|
| Catalog | The plugin extends the software catalog, e.g. through a card, or full page dashboard. |
| Scaffolder | The plugin adds custom actions to the component scaffolder. |

The ADP Portal is built on Backstage, an open-source platform for building developer portals. Backstage is a Node application which contains a backend API and React based front end. This page outlines the steps you need to follow to set up and run Backstage locally.

+[[TOC]]

+Backstage has a few requirements to be able to run. These are detailed in the Backstage documentation, with some requirements detailed below.

+Backstage requires a UNIX environment. If you're using Linux or a Mac you can skip this section, but if you're on a Windows machine you will need to install WSL.

+WSL can either be installed via the command line (follow Microsoft's instructions) or from the Microsoft Store. You will then need to install a Linux distribution. Ubuntu is recommended; either download from the Microsoft Store or run wsl --install -d Ubuntu-22.04 in your terminal.

Familiarise yourself with:

+++⚠️ Everything you do with Backstage from this point forwards must be done in your WSL environment. Don't attempt to run Backstage from your Windows environment - it won't work!

+

You will need either Node 16 or 18 to run Backstage. It will not run on Node 20.

+The recommended way to use the correct Node version is to use nvm.

+++⚠️ If on a PC make sure you install and configure nvm in your WSL environment.
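As a rough sketch of the version constraint above, the helper below checks whether a Node version string has a major version of 16 or 18. The name `isSupportedNodeVersion` and the constant `SUPPORTED_NODE_MAJORS` are hypothetical; they are not part of Backstage or the portal.

```typescript
// Major versions the text above says Backstage will run on.
const SUPPORTED_NODE_MAJORS: number[] = [16, 18];

// Returns true when a version string such as "18.19.0" or "v16.20.2"
// has a supported major version. Unparseable input returns false.
function isSupportedNodeVersion(version: string): boolean {
  const major = parseInt(version.replace(/^v/, ""), 10);
  return SUPPORTED_NODE_MAJORS.indexOf(major) !== -1;
}
```

In a Node script this could be called as `isSupportedNodeVersion(process.versions.node)` before starting the application.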

+

You will then need to install Yarn globally. Run npm install --global yarn in your WSL environment.

Make sure you've got Git configured. If on WSL follow the steps to make sure you've got Git configured correctly - your settings from Windows will not carry over.

+Ensure you have a GPG key set up so that you can sign your commits. See the guide on verifying Git signatures. If you have already set up a GPG key on Windows this will need to be exported and then imported in to your WSL environment.

+To export on Windows using Kleopatra, see here. To import using gpg on WSL, see here.

+If installing WSL for the first time you will likely need to install the build-essential package. Run sudo apt install build-essential.

Check if you have the Azure CLI installed in your WSL environment. Run az --version. If this returns an error you need to install the Azure CLI: curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash. See Install the Azure CLI on Linux.

We have integrated Backstage with Azure AD for authentication. For this to work you will need to sign in to the O365_DEFRADEV tenant via the Azure CLI.

+After installing and configuring pre-requisites we can clone the adp-portal repo, configure Backstage, and run the application.

+++⚠️ Remember, if on Windows these steps must be followed in your WSL environment.

+

If you haven't already, create a folder in your Home directory where you can clone your repos.

+Clone the adp-portal repo into your projects folder.

Client IDs, secrets, etc. for integrations with 3rd parties are read from environment variables. In the root of the repo there is a file named env.example.sh. Duplicate this file and rename it to env.sh.

+A senior developer will be able to provide you with the values for this file.

+A private key is also required for the GitHub app. Again, a senior developer will be able to provide you with this key.

+++ℹ️ Later on down the line we are hoping to move these environment variables to Key Vault

+

To load the environment variables in to your terminal session run . ./env.sh. Make sure you include both periods - the first ensures that the environment variables are loaded into the correct context.

The application needs to be run from the /app folder - run cd app if you're in the root of the project.

Run the following two commands to install dependencies, and build and run the application:

To stop the application, press <kbd>Ctrl</kbd>+<kbd>C</kbd> twice.

If you have issues starting Backstage, check the output in your terminal. Common errors are below:

+"Backend failed to start up Error: Invalid Azure integration config for dev.azure.com: credential at position 1 is not a valid credential" - Have you loaded your environment variables? Run . ./env.sh from the root of the repo, then try running the application again.

"MicrosoftGraphOrgEntityProvider:default refresh failed, AggregateAuthenticationError: ChainedTokenCredential authentication failed" - have you logged in to the Azure CLI? Run az login and make sure you sign in to the O365_DEFRADEV tenant. Try running the application again.

Catalog data is pulled in from multiple sources which are configured in the app-config.yaml file. Individual entities are defined by a YAML file.

Backstage regularly scans the DEFRA GitHub organisation for repos containing a catalog-info.yaml file in the root of the master branch. The FFC demo services contain examples of this file (see ffc-demo-web). New components scaffolded through Backstage will contain this file (but it may need further customisation); existing components will need to have the file added manually.

A catalog-info.yaml file for a component might look like this:

The Backstage documentation describes the format of this file - it is similar to a Kubernetes object config file. The key properties we need to set are:

github.com/project-slug is used to pull data from the specified project into the Pull Requests dashboard; the dev.azure.com annotations pull pipeline runs into the CI/CD dashboard; sonarqube.org/project-key pulls in SonarCloud metrics for the specified project.

frontend (for a web application) and backend (for an API or backend service).

If a component consumes infrastructure such as a database or service bus queue then that must also be defined alongside the component. Multiple entities can be defined in a single file by using a triple dash --- to separate them.
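To make the role of the annotations concrete, here is a minimal sketch that reports which of the annotations named on this site are missing from a component entity. The `ComponentEntity` interface is a deliberately reduced, hypothetical view of a catalog entity, and `missingAnnotations` is an illustrative helper rather than anything provided by Backstage.

```typescript
// Annotations named in the documentation and the feature each one feeds.
const ANNOTATION_PURPOSES: Record<string, string> = {
  "github.com/project-slug": "Pull Requests dashboard",
  "dev.azure.com/project": "CI/CD dashboard (ADO project name)",
  "dev.azure.com/build-definition": "CI/CD dashboard (pipeline name)",
  "sonarqube.org/project-key": "SonarCloud metrics",
};

// Hypothetical, minimal slice of a catalog entity; real entities carry more fields.
interface ComponentEntity {
  metadata: {
    name: string;
    annotations?: Record<string, string>;
  };
}

// Lists the known annotation keys that the entity does not define.
function missingAnnotations(entity: ComponentEntity): string[] {
  const present = entity.metadata.annotations ?? {};
  return Object.keys(ANNOTATION_PURPOSES).filter((key) => !(key in present));
}
```

A component missing one of these annotations will still be registered; the corresponding dashboard simply has no project to pull data from.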

The minimum permissions that we require for our ADP GitHub App are:

+Please note:

+Repository permissions permit access to repositories and related resources.

+Repository creation, deletion, settings, teams, and collaborators.

+Repository contents, commits, branches, downloads, releases, and merges.

+Search repositories, list collaborators and access repository metadata.

+Pull requests and related comments, assignees, labels, milestones and merges.

+Organisation permissions permit access to organisation related resources.

+None required at this time

+These permissions are granted on an individual user basis as part of the User authorization flow.

+None required at this time

+GitHub Apps can request almost any permission from the list of API actions supported by GitHub Apps.

+Possible Remediations:

+Leaking or misplaced GitHub App credentials.

+Possible Remediation

+Answer: +Yes, please see permission break down above with the reasons why we require them.

+“It's not possible to have multiple Backstage GitHub Apps installed in the same GitHub organisation, to be handled by Backstage. We currently don't check through all the registered GitHub Apps to see which ones are installed for a particular repository. We only respect global organisation installs right now.”

Answer: We should be able to run multiple instances of Backstage within the same GitHub organisation, although conflicts may occur with certain Backstage plugins. For example, GitHub Discovery searches repositories for a catalog-info.yaml file so that entities can be registered automatically. If Backstage 1 and Backstage 2 both use the default GitHub Discovery provider configuration, they will pick up the same files as each other. Resolving this would be as simple as changing the config in Backstage 1 or 2 to look for YAML files with different names or paths. A second option would be to restrict which repositories the GitHub App has access to.

To help remediate this concern, we will change the config where we can to add an "adp" prefix. For example, "adp-catalog-info.yaml".

+We are not using the web hook at the moment but we may look to support GitHub events in future (Documentation).

The ADP Portal is built on Backstage, using React and TypeScript on the frontend. This page outlines the steps taken to incorporate the GOV.UK branding into the ADP Portal.

+Backstage allows the customization of the themes to a certain extent, for example the font family, colours, logos and icons can be changed following the tutorials. All of the theme changes have been made within the App.tsx file.

In order to install GOV.UK Frontend you need to meet some requirements:

Once those are successfully installed you can run the following in your terminal within the adp-portal/app/packages/app folder:

yarn add govuk-frontend

In order to import the GOV.UK styles, two lines of code have been added within the style.module.scss file:

+$govuk-assets-path: "~govuk-frontend/govuk/assets/"; // this creates a path to the fonts and images of GOV.UK assets.

+@import "~govuk-frontend/govuk/all"; // this imports all of the styles which enables the use of colours and typography.

The colour scheme is applied through exporting the GOV.UK colours as variables within the style.module.scss file into the Backstage Themes created. Currently there are a few colours that are being used however more variables can be added within the scss file and can be imported within other files. To import the scss file with the styles variables this statement is used in the App.tsx file:

+import styles from 'style-loader!css-loader?{"modules": {"auto": true}}!sass-loader?{"sassOptions": {"quietDeps": true}}!./style.module.scss';

++This import statement enables the scss file to load and process.

+

The style variables then were used within the custom Backstage themes:

+The font used within the ADP Portal is GDS Transport as the portal will be on the gov.uk domain.

To get this working within the style.module.scss file, the fonts were imported by assigning them to two scss variables called govuk-assets-path-font-woff2 and govuk-assets-path-font-woff:

+++As recommended we are serving the assets from the GOV.UK assets folder so that the style stays up to date when there is an update to the GOV.UK frontend.

+

These variables were then passed into the url of the @font-face rule:

To customize the font of the Backstage theme, the scss was imported (check the colour scheme section) and used within the fontFamily element of the createUnifiedTheme function:

The logo of the ADP Portal was changed by updating the two files within the src/components/Root folder.

Both DEFRA logos are imported as png and saved within the Root folder.

A brief description of changes being made

Link to the relevant work items: e.g. Relates to ADO Work Item AB#213700 and builds on #3376 (link to ADO Build ID URL)

+Any specific actions or notes on review?

+Any relevant testing information and pipeline runs.

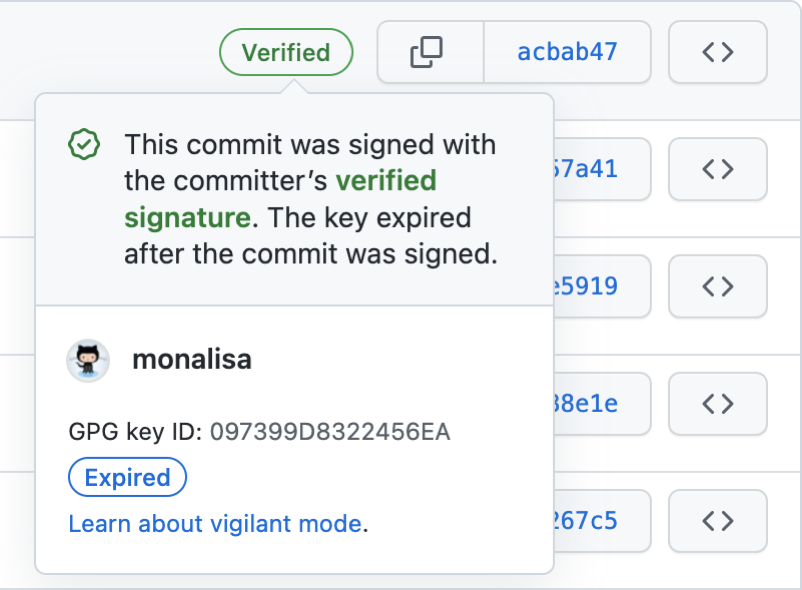

{work item number}: {title}

The project's branch policy is configured to necessitate the use of Git signed commits for any merging activities. This policy serves a twofold purpose: firstly, it validates the authenticity of changes and acts as a barrier against unauthorised or malevolent alterations to the codebase.

+Secondly, it provides assurance of code integrity by demonstrating that changes have remained unaltered throughout transit and subsequent commits. During the evaluation of pull requests or merge requests, the presence of signed commits also offers a reliable means to confirm that the proposed changes have been authored by authorised contributors, thereby reducing the likelihood of unintentionally accepting unauthorised code.

+To use signed commits, developers must generate a GPG (GNU Privacy Guard) key pair, which includes a private key kept secret and a public key that is shared. Commits are then signed using the private key, and others can verify the commits using the corresponding public key.

+

Please refer to the following link. Please make sure the email you enter in step 8 is the email address of your GitHub account: https://docs.github.com/en/authentication/managing-commit-signature-verification/generating-a-new-gpg-key

+

https://docs.github.com/en/authentication/managing-commit-signature-verification

This documentation captures the existing design of the demo/exemplar services in the FFC platform. It provides an overview of the components in the demo services and the application flow.

The demo service contains 6 containerized microservices orchestrated with Kubernetes. The purpose of these services is to prove the platform's capability to provision the infrastructure required for developing a digital service, along with CI/CD pipelines, with minimal effort. This in turn allows developers to focus on the core business logic.

+Below are the demo services that are present at the moment.

| Service | Dev Platform | Git Repo |
|---|---|---|
| Payments Service | Node.Js | https://github.com/DEFRA/ffc-demo-payment-service |
| Payments Service Core | Asp.Net Core | https://github.com/DEFRA/ffc-demo-payment-service-core |
| Payments Web | Node.Js | https://github.com/DEFRA/ffc-demo-payment-web |
| Claim Service | Node.Js | https://github.com/DEFRA/ffc-demo-claim-service |
| Calculation Service | Node.Js | https://github.com/DEFRA/ffc-demo-calculation-service |
| Collector Service | Node.Js | https://github.com/DEFRA/ffc-demo-collector |
| Demo Web | Node.Js | https://github.com/DEFRA/ffc-demo-web |

+

+

| | Code | Docker Compose | Dev | Test | Pre-production |
|---|---|---|---|---|---|
| Lint/Audit | X | | | | |
| Snyk Test | X | | | | |
| Static Code Analysis/SonarCloud | X | | | | |
| Functional/BDD | | X | X | | |
| Integration Tests/Contract testing using Pact Broker | | | X | X | |
| Performance Testing (JMeter) | | | | | X |
| Pen Testing (OWASP ZAP) | | X | | | X |

Azure Policy extends Gatekeeper v3, an admission controller webhook for Open Policy Agent (OPA), to apply at-scale enforcements and safeguards on your clusters in a centralized, consistent manner. Azure Policy makes it possible to manage and report on the compliance state of your Kubernetes clusters from one place. The add-on enacts the following functions:

+Azure Policy for Kubernetes supports the following cluster environments:

+https://learn.microsoft.com/en-us/azure/governance/policy/concepts/policy-for-kubernetes +https://learn.microsoft.com/en-us/azure/container-apps/dapr-overview?tabs=bicep1%2Cyaml

+TODO

+This page is a work in progress and will be updated in due course.

+Add to details about each service.

+This getting started guide summarises the steps for onboarding a delivery programme onto ADP via the Portal. It also provides an overview of the automated processes involved.

+Before onboarding a delivery programme you will first need to ensure that:

+By completing the steps in this guide you will be able to:

+Once you have navigated to the 'ADP Data' page you will be presented with the 'Delivery Programmes' option.

By clicking 'View' you will have the ability to view existing Delivery Programmes and add new ones if you have the admin permissions.

+

You can start entering Delivery Programme information by clicking the 'Add Delivery Programme' button.

You will be presented with various fields; some are optional. For example, the 'Finance Code', 'Website', and 'Alias' are not required, and you can add them later if you wish.

If the Arms Length Body (ALB) for your programme has already been created it will appear in the Arms Length Body dropdown and you will be able to select it accordingly. The programme managers' dropdown should also be pre-populated, and you are able to select more than one manager.

+This form includes validation. Once you have completed inputting the Delivery Programme Information and pressed 'create', the validation will run to check if any changes need to be made to your inputs.

+Once you have created your Delivery Programme, you will automatically be redirected to the view page which will allow you to look through existing programmes and edit them.

+

This getting started guide summarises the steps for onboarding a delivery project onto ADP via the Portal. It also provides an overview of the automated processes involved.

+Before onboarding a delivery project you will first need to ensure that:

+By completing this guide you will have completed these actions:

+Once you have navigated to the 'ADP Data' page you will be presented with the 'Delivery Projects' option.

By clicking 'View' you will have the ability to view existing Delivery Projects and add new ones if you have the admin permissions.

+

You can start entering Delivery Projects information by clicking the 'Add Delivery Projects' button.

You will be presented with various fields; some are optional. For example, the 'Alias', 'Website', 'Finance Code' and 'ADO Project' are not required, and you can add them later if you wish.

If the Delivery Programme for your project has already been created it will appear in the Delivery Programme dropdown, and you will be able to select it accordingly.

+This form includes validation. Once you have completed inputting the Delivery Project Information and pressed 'create', the validation will run to check if any changes need to be made to your inputs.

+...

+...

+...

+...

+Once you have created your Delivery Project, you will automatically be redirected to the view page which will allow you to look through existing projects and edit them.

+

This getting started guide summarises the steps for onboarding a user onto your delivery project in ADP. It also provides an overview of the automated processes involved.

+Before onboarding a user on to your delivery project you will first need to ensure that:

+By completing this guide you will have completed these actions:

+....

+...

+PostgreSQL is the preferred relational database for microservices. This guide describes the process for creating a database for a microservice and configuring the microservice to use it.

+Note

The ADP Platform currently supports PostgreSQL as the only relational database option.

There are two ways to create a Postgres database in ADP.

When scaffolding a new backend service using the ADP Portal, you have the option to specify the name of the database. Refer to the section on Selecting a template.

For an existing service, you can add values to the Infrastructure Helm Chart values. Refer to the Infrastructure section on Database for Flexible Server.

+Tip

+An example of how to specify the Helm Chart values is provided in the ffc-demo-claim-service repository, refer to the configuration in the values.yaml.

+The ADP Platform CI and deployment pipelines support database migrations using Liquibase.

Create a Liquibase changelog defining the structure of your database at changelog/db.changelog.xml, relative to the root of your microservice repository.

Guidance on creating a Liquibase changelog is outside of the scope of this guide.

+Update docker-compose.yaml, docker-compose.override.yaml, and docker-compose.test.yaml to include a Postgres service and add Postgres environment variables to the microservice.

Replace <programme code> and <service> as per naming convention described above.

The following scripts and files are scaffolded as part of your backend service to provide a good local development experience.

- docker-compose.migrate.yaml in the root of your microservice repository that spins up Postgres in a Docker container.
- scripts/ folder contains three bash scripts: start, test and postgres-wait.
- scripts/migration/ folder contains two scripts to apply and remove migrations: database-up and database-down.

Execute the start script to start the Postgres container.

Some microservices require Postgres extensions to be installed in the database. Below is the list of the enabled extensions:

+This is a two step process.

+Tip

+Request the ADP Platform Team to enable the extension on the Postgres Flexible server if it is not in the list above of enabled extensions.

When scripting the database migrations for creating extensions, use IF NOT EXISTS. This will ensure that the scripts can run both locally and in Azure.

When running the Postgres database locally in Docker, you will have sufficient permissions to create the extensions. However, in Azure, the ADP Platform will apply the migrations to the database instead of using the microservice's managed identity. If you don't use IF NOT EXISTS, the migrations on the Azure Postgres database will fail due to insufficient permissions.

Below is an example of a SQL script you can use in your migration to enable an extension.

+In this how to guide you will learn how to create a new Platform service on ADP for your delivery project and team. You will also learn what automated actions will take place, and any areas of support that may be needed.

+Before creating a Platform business service (microservice), you will first need to ensure that you have:

+Note

+Please contact the ADP Platform Engineering team for support if you don’t have, and cannot setup/configure, these prerequisites.

+By completing this guide, you will have completed these actions:

+The following areas require the support of the ADP Platform Team for your service initial setup:

+Note

+The initial domain (Frontend service or external API URL) creation is currently done via the Platform team pipelines. Please contact the Platform team to create this per environment once the service is scaffolded.

+Tip

+You can choose Node.js for frontends, and either Node.js or C# for backends and APIs.

+Enter the properties describing your component/service:

+{programme}-{project}-{service}. For example, fcp-grants-web.

To encourage coding in the open, the repository will be public by default. Refer to the GDS service manual for more information. You can select a ‘private’ repository by selecting the ‘private repo’ flag in GitHub.

+The scaffolder will create a new repository and an associated team with ‘Write’ permissions:

+CI/CD pipelines will be created in Azure DevOps:

+DefraGovUK and not changeable.

Now that you have reviewed and confirmed your details, your new Platform service will be created. It will complete the actions detailed in the overview section. Once this process completes, you will be given links to your new GitHub repository, the Portal Catalog location, and your Pipelines. You now have an ADP business service!

+

We use HELM Charts to deploy, manage and update Platform service applications and their dedicated and associated infrastructure. This is ‘self-service’ managed by the platform development teams/tenants. We use Azure Bicep/PowerShell for all other Azure infrastructure and Entra ID configuration, including Platform shared and ‘core’ infrastructure. This is managed by the ADP Platform Engineers team. An Azure Managed Identity (Workload ID) will be automatically created for every service (microservice app) for your usage (i.e. assigning RBAC roles to it).

+The creation of infrastructure dedicated for your business service/application is done via your microservice HELM Chart in your repository, and deployed by your Service CI/CD pipeline that you created earlier. A ‘helm’ folder will be created in every scaffolded service with 2 subfolders. The one ending with ‘-infra’ is where you define your service’s infrastructure requirements in a simple YAML format.

+Note

+The full list of supported ‘self-service’ infrastructure can be found in the ADP ASO Helm Library Documentation on GitHub with instructions on how to use it.

+The image below is an example of how to self-service create additional infrastructure by updating the Helm chart's ‘values.yaml’ file with what you require to be deployed:

+

Warning

+Please contact the ADP Platform Engineers Team if you require any support after reading the provided documentation or if you’re stuck.

+A system is a label used to group together multiple related services. Backstage recognizes and uses this label to make it clear which services interact with each other. Systems are a concept that Backstage provides out of the box, and they are documented by Backstage here

+In order to create a system, you simply need to add a new definition for it to the ADP software templates repository. There is an example system to show the format that should be used. Once this system is added, you need to add a link to it from the all.yaml file. You will also need to choose a name for your system, which should be in the format {delivery-project-id}-{system-name}-system e.g. fcp-demo-example-system.

Once the system has been added and the all.yaml file has been updated, you will need to wait for the ADP portal to re-scan the repository which happens every hour. If you need the system to be available sooner than that, then an ADP admin can trigger a refresh at any time by requesting a refresh of the project-systems location.

all.yaml file

The all.yaml file is what tells the ADP portal where to find the systems, and so every file containing a definition for a system must be pointed to by this file. To point to a new file, you will need to add a new entry to the targets array which should be the relative path from the all.yaml file to your new system file.

all.yaml
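A minimal sketch of what this file could look like, assuming it is a standard Backstage `Location` entity. The `metadata` values and the target file name are illustrative; only the `targets` array of relative paths is described in this guide.

```yaml
apiVersion: backstage.io/v1alpha1
kind: Location
metadata:
  name: project-systems          # assumed from the location name mentioned above
  description: Systems defined for delivery projects   # illustrative
spec:
  targets:
    # One entry per system file, relative to all.yaml
    - ./fcp-demo-example-system.yaml
```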

+

{system}.yaml file

Your system will actually be defined inside its own .yaml file. The name of this file should be the name of the system you are creating to make it easier to track which system is defined where. The format of this file should follow this example:

my-system.yaml
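As a sketch, assuming the standard Backstage `System` entity format, a system definition could look like the following. The title, description and owner are hypothetical values; the name follows the {delivery-project-id}-{system-name}-system convention described earlier, so under that convention the file would be called fcp-demo-example-system.yaml.

```yaml
apiVersion: backstage.io/v1alpha1
kind: System
metadata:
  name: fcp-demo-example-system
  title: FCP Demo Example System            # illustrative
  description: Groups the services that make up the demo example system
spec:
  # Backstage requires an owner for a System; this group name is hypothetical
  owner: group:default/fcp-demo
```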

+

In this how to guide you will learn how to build, deploy, and monitor a Platform service (Web App, User Interface, API etc) for your team. It includes information about Pipelines specifically and how the ADP Backstage Portal supports this.

+Before building and deploying a service, you will first need to ensure that:

+By completing this guide, you will have completed these actions:

+All pipelines in ADP are created in your project's or programme's Azure DevOps project. This is specific to your team: it is the one you chose when you scaffolded your service. We use YAML Azure Pipelines, and Defra GitHub to store all code. Pipelines are mapped 1-1 per microservice, and can deploy the Web App, Infra, App Configuration and Database schema together as an immutable unit.

+In your scaffolded repository:

+<your-service-name>. <projectcode>-<servicename>-api

The image above is an example of a scaffolded pipeline called ‘adp-demo99’ in the DEMO folder.

+Can I find this in the ADP Portal?

+Yes! Simply go to your components page that you scaffolded/created via the ADP Portal, and click on the CI/CD tab, which will give you information on your pipeline, and will link off to the exact location.

+We promote continuous integration (CI) and continuous delivery (CD). Your pipeline will trigger (run the CI build) automatically on any change to the ‘main’ branch or to any feature branch you create, every time you check in. This includes PR branches. You can also run your pipeline manually from the ADO Pipelines interface by clicking ‘Run pipeline’.

+You can:

+Pipeline documentation and parameters and configuration options can be found here.

+

The image above shows an example pipeline run.

+You can change some basic functionality of your pipeline. A lot of it is defined for you in a convention-based manner, including the running of unit tests, reporting, environments that are available etc, and some are selectable, such as build of .NET or NodeJS apps, location of test files, PR and CI triggers, and the parameters to deploy configuration only or automatic deploy on every feature build.

+Full details can be found on the Pipelines documentation GitHub page.

+

The image above is an example of what can be changed in terms of Pipeline Parameters (triggers, deployment types, paths to include/exclude).
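For orientation, CI and PR triggers with path filters are typically expressed as follows in Azure Pipelines YAML. This is a sketch using the standard `trigger` and `pr` keywords; the branch patterns and excluded paths are illustrative assumptions, not the values in your scaffolded pipeline.

```yaml
# CI trigger: build on changes to main and to feature branches
trigger:
  branches:
    include:
      - main
      - feature/*        # assumed branch naming
  paths:
    exclude:
      - docs
      - README.md

# PR trigger: build pull requests that target main
pr:
  branches:
    include:
      - main
  paths:
    exclude:
      - docs
```

Excluding documentation paths in this way avoids running a full build when only non-code files change.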

+The below image is an example of what can be changed. You can change things like your config locations, test paths, what ADO Secret variable groups you wish to import, what App Framework (Node or C#) etc.

+

To promote your code through environments, you can use the Azure Pipelines user interface for your team/project to either:

+Your environments and any default gates or checks will be automatically plotted for you. This is an example of a full pipeline run. You can select, based on the Platform route-to-live documentation, which environments you promote code to. You don’t need to go to all environments to go live.

+

This is an example of a waiting ‘stage’ which is an environment:

+

To promote code, you can select ‘Review’ in the top-right hand corner and click approve.

+Full Azure Pipelines documentation can be found here.

+Every pipeline run includes steps such as unit tests, integration tests, acceptance tests, app builds, code linting, static code analysis including Sonar Cloud, OWASP checks, performance testing capability, container/app scanning with Snyk etc.

+We report metrics in the Azure DevOps Pipelines user interface for your project and service, for things like unit test coverage, test passes and failures, and any step failures. Full details are covered in the Pipelines documentation. Details can also be found in your project's Snyk or Sonar Cloud report.

+From the ADP Backstage Portal, you can find the following information for all environments:

+The portal is fully self-service, and each deployed component details the above. You should use the ADP Portal to monitor, manage and view data about your service if it isn’t included in your Pipeline run.

+In this how to guide you will learn how to create, deploy, and run an acceptance test for a Platform service (Frontend Web App or an API) for your team.

+Before adding acceptance tests for your service, you will need to ensure that:

+By completing this guide, you will have completed these actions:

+These tests may include unit, integration, acceptance, performance, accessibility etc., as long as they are defined for the service.

+Note

+The pipeline will check for the existence of the file test\acceptance\docker-compose.yaml to determine if acceptance tests have been defined.
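For orientation, a docker-compose.yaml for acceptance tests could be shaped roughly like this. It is a sketch under assumptions: the service name and build context are hypothetical, and passing TEST_TAGS through to the test container is assumed from the way this guide sets that variable for local runs.

```yaml
# test/acceptance/docker-compose.yaml (illustrative sketch)
services:
  acceptance-tests:              # hypothetical service name
    build:
      context: .
    environment:
      # Tag expression selecting which features or scenarios run;
      # falls back to a default when TEST_TAGS is not set in the shell
      TEST_TAGS: "${TEST_TAGS:-@sanity or @smoke}"
```

The `${VAR:-default}` form is standard Docker Compose interpolation, so the same file works whether or not the variable is exported.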

You may add tags to features and scenarios. There are no restrictions on the name of the tag. Recommended tags include the following: @sanity, @smoke, @regression +refer

+If custom tags are defined, then the pipeline should be customized to run those tests as detailed in following sections.

+PowerShell: $ENV:TEST_TAGS = "@sanity or @smoke"
Bash: export TEST_TAGS="@sanity or @smoke"

Note

+Every pipeline run includes steps to run various post-deployment tests. These tests may include unit, integration, acceptance, performance, accessibility etc., as long as they are defined for the service.

+You can customize the tags and environments where you would like to run specific features or scenarios of the acceptance tests.

+If not defined, the pipeline will run with the following default settings.

+Please refer to the ffc-demo-web pipeline:

+Test execution reports will be available via Azure DevOps Pipelines user interface for your project and service.

+In this how to guide you will learn how to create, deploy, and run a performance test for a Platform service (Web App, User Interface etc) for your team.

+Before adding performance tests for your service, you will need to ensure that:

+By completing this guide, you will have completed these actions:

+Note

+Every pipeline run includes steps to run various tests before and after deployment. These tests may include unit, integration, acceptance, performance, accessibility etc., as long as they are defined for the service.

+The pipeline will check for the existence of the file test\performance\docker-compose.jmeter.yaml to determine if performance tests have been defined.

The Performance Test scripts should be added to the test\performance folder in the GitHub repository of the service. Refer to the ffc-demo-web example. This folder should contain a docker-compose.jmeter.yaml file, which is used to build the Docker containers required to execute the tests. As a minimum, this will create a JMeter container and optionally create Selenium Grid containers. Using BrowserStack is preferred to running the tests on Selenium Grid hosted in Docker containers, because you get better performance and scalability as the test load increases.
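A minimal sketch of such a file, creating only the JMeter container. The image (a community JMeter image, not confirmed as the one ADP uses), the test plan file name and the mounted paths are assumptions for illustration; the `-n`, `-t`, `-q` and `-l` options are standard JMeter command-line flags.

```yaml
# test/performance/docker-compose.jmeter.yaml (illustrative sketch)
services:
  jmeter:
    image: justb4/jmeter          # assumed image; pin a version in practice
    # -n non-GUI mode, -t test plan, -q additional properties file, -l results log
    command: ["-n", "-t", "/tests/test-plan.jmx", "-q", "/tests/perf-test.properties", "-l", "/results/results.jtl"]
    volumes:
      - .:/tests                  # test plan and perf-test.properties
      - ./results:/results        # hypothetical output folder
```

Passing perf-test.properties with `-q` lets the virtual user count, loop count and ramp-up duration be changed without editing the test plan itself.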

Execute the above commands in Bash or PowerShell.

+You can modify the number of virtual users, loop count and ramp-up duration by changing the settings in the file perf-test.properties.

+You can then reference these variables in your JMeter Script.

+

You can customize the environments where you would like to run specific features or scenarios of the performance tests.

+If not defined, the pipeline will run with the following default settings.

+Details on migrating your existing project and its services to ADP.

+ADP Portal Setup:

+For each of your platform services you now need to migrate them over to ADP and create the needed infrastructure to support them. Link to guide.

+Once all services and infrastructure are created and verified in SND3 (O365_DefraDev), we will begin the process of pushing the services and infrastructure to the environments in the DEFRA tenant: DEV1, TST1/2, and PRE1. Once deployment is complete and tested in the lower environments, we will be able to progress to PRD1, ensuring that the DEFRA release management process is adhered to.

+Developers of the delivery project actively learning and using the platform to develop new features.